AI is rapidly transforming the business landscape, and large language models (LLMs) are playing a key role in this transformation. LLMs are a type of AI that is trained on massive datasets of text and code. This allows them to learn the nuances of human language and to generate text that is both coherent and informative.

Meta has released an open-source version of its AI model, Llama 2, for public use. It is available to startups, established businesses, lone operators, and of course the research community.

What are the characteristics of Llama 2?

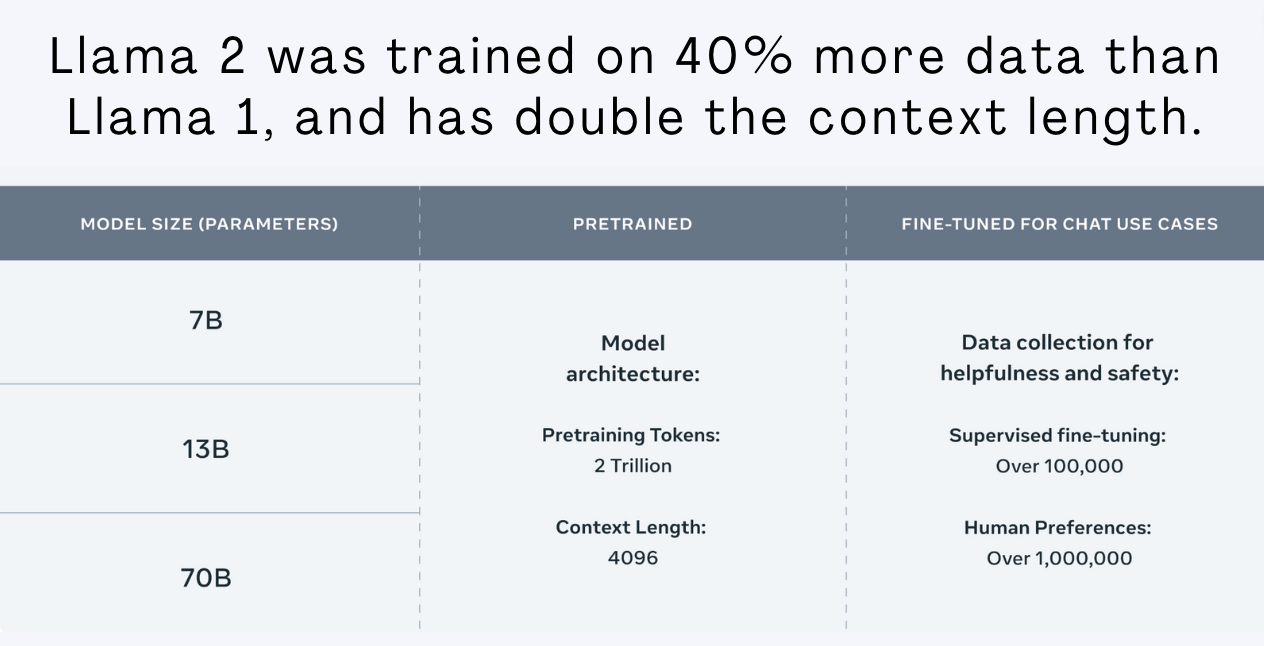

Llama 2 is available in several sizes, including 7B, 13B, and 70B-parameter models. These models can be fine-tuned and deployed easily and safely on multiple platforms, such as Amazon SageMaker and Microsoft Azure. It is open source, so anybody can also download it and deploy it anywhere. This lowers the barriers to AI adoption and eases data privacy concerns: you don't have to send data to a third-party model provider, because you can host the model yourself, tune it with your own data, and make it your own.

Llama 2 is not the first LLM to be released as open source, but it is one of the most powerful and versatile open LLMs available. Like other LLMs, it has been trained on a massive dataset of text and code, which is what allows it to generate coherent and informative text.

Llama 2 has already been assessed against models like ChatGPT and PaLM using the "win rate" metric. The 70B Llama 2 model performs comparably to GPT-3.5-0301 and surpasses other models in helpfulness for single and multi-turn prompts. However, Llama 2 is weaker at coding, falling behind GPT-3.5, GPT-4, and models specifically designed for coding, such as StarCoder.

By contrast, Claude-2 excels in coding, mathematics, and logical tasks, including PDF comprehension, which GPT-4 struggles with. It scored 71.2% on the Codex HumanEval, a Python coding skills evaluation.

Regarding poetic writing, ChatGPT seems more sophisticated and intentional in its word choice, while Llama-2 uses a more straightforward, rhyme-focused approach, reminiscent of high school poetry.
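As a rough illustration of the "win rate" metric mentioned above: evaluators compare two models' answers to the same prompt and record a win, tie, or loss for the model under test. The sketch below assumes ties count as half a win, which is one common convention and not necessarily how the comparisons cited here were scored. The function name `win_rate` and the sample judgments are invented for illustration.

```python
from collections import Counter

VALID_OUTCOMES = {"win", "tie", "loss"}


def win_rate(outcomes, tie_weight=0.5):
    """Fraction of comparisons won, counting each tie as `tie_weight` of a win.

    `tie_weight=0.5` is an assumption; some reports list ties separately.
    """
    if not outcomes:
        raise ValueError("need at least one comparison")
    counts = Counter(outcomes)
    unknown = set(counts) - VALID_OUTCOMES
    if unknown:
        raise ValueError(f"unknown outcomes: {sorted(unknown)}")
    return (counts["win"] + tie_weight * counts["tie"]) / len(outcomes)


# Hypothetical judgments for one model against a baseline, one per prompt.
judgments = ["win", "win", "tie", "loss", "win", "loss", "tie", "win"]

print(f"win rate (ties as half): {win_rate(judgments):.3f}")
print(f"win rate (ties ignored): {win_rate(judgments, tie_weight=0.0):.3f}")
```

How ties are treated can shift the headline number noticeably, so it is worth checking the convention before comparing win rates from different sources.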

Why is it such a big deal?

It's simple: Llama 2 is open source, free for research and commercial use.

Meta believes that Llama 2 has the potential to be a game changer for AI for business.

Llama 2 potentially poses a significant threat to OpenAI and hyperscaler-owned GenAI models because of its capabilities and its potential for private use. Its 70B model is competitive with GPT-3.5 on helpfulness, and hosting it privately can solve many of the data security problems that organizations encounter when entrusting sensitive information to third-party AI hosting companies.

Many clients are worried about data privacy as it's hard to verify the security of data with the existing black-box systems. Issues related to AI-generated misinformation, copyright concerns, liability, libel, inaccuracies, and hallucinations are also prevalent with the current models.

By hosting AI models privately, organizations can better address these issues and meet enterprise security and risk management requirements. The shift towards open-source models like Llama that allow for private hosting is expected to increase, driven by these security, privacy, and risk management requirements.

While implementing privately hosted GenAI models requires new skills, the costs associated with this are predicted to be counterbalanced by the savings from third-party hosting vendor transactions and license fees, as well as the expenses to ensure data confidentiality, security, and privacy.

Even though open-source GenAI models may introduce new security and safety concerns due to hackers and other malicious actors, they provide an initial solution to the ongoing issues faced by enterprise users in the AI space.

Llama 1 already drove a burst of innovation in the community, at a much faster pace than anybody expected. Creating and refining open-source models is quick, cost-effective, and well suited to the globally collaborative style that is typical of open-source projects.

From a leaked memo written by a Google engineer:

“…in terms of engineer-hours, the pace of improvement from these models vastly outstrips what we can do with our largest variants, and the best are already largely indistinguishable from ChatGPT.”

In the long term, companies offering closed-source models, such as OpenAI, could see their moat erode as long as they keep training on public data. Eventually, everyone will be able to access models of similar quality from open source.

The efficiency and accelerated pace of innovation inherent to the globally collaborative open-source approach are unrivaled. How could a closed-source system possibly stand against the formidable array of engineers from across the globe? It simply cannot.

“We Have No Moat, And Neither Does OpenAI”

I still think OpenAI, Anthropic, Cohere, and others have a first-mover advantage, incredible AI talent, and a great system for delivering high-quality models at scale. That has value. Their systems are also widely used, which gives them a lot of data to improve the tools and models in production.

OpenAI and Google have moats in the large language model (LLM) space because they have users interacting with their models consistently. This gives them access to quality and diverse training prompts for fine-tuning, which is a costly and data-intensive process. Open-source efforts will have a tough time replicating this moat, as they will not have the same access to data and engineering infrastructure.

This is what Mark Zuckerberg had to say about Meta's Approach towards Openness & Academic Excellence in AI Development:

- Meta's mission is to collaborate with the world's best researchers to pioneer AI technology.

- While training state-of-the-art models is largely exclusive to major companies due to high costs, Meta is committed to making the process more efficient and accessible.

- The emphasis is on community learning and fostering innovation, with open-source providing security through collective scrutiny.

Interview with Mark Zuckerberg a month before the release of Llama 2

What are the benefits for Meta in open sourcing Llama 2?

The development of Llama 2 likely cost Meta more than 20 million USD. Why would they open-source it to everyone?

Leveraging the 'wisdom of crowds' significantly improves and safeguards AI systems. It also removes the exclusive control that large tech companies hold, as currently, they're the only ones possessing the requisite computational power and substantial data reservoirs needed to build these models.

Meta's move is a strategic step to dilute the competitive advantage of its tech counterparts such as Google. By offering everyone the opportunity to launch a competitor to bots like ChatGPT or Bard, it may level the playing field.

Big tech companies often open source their software for several reasons:

- Community Contribution and Innovation: When software is open-sourced, a community of developers worldwide can contribute to its development. This can lead to faster innovation and more robust software as bugs are identified and fixed, and new features are proposed and implemented more quickly than they might be by the in-house team alone.

- Standardization: By open-sourcing certain technologies, companies can drive industry standards. This can be particularly advantageous in newer, emerging fields where standards are still being established. Companies that manage to set these standards can shape the industry and gain significant influence.

- Recruitment and Brand Image: Open source projects can also serve as a showcase for a company's technological prowess, attracting top talent who want to work on cutting-edge technologies. This can also improve the company's brand image and reputation within the community.

- Interoperability and Compatibility: Open-source software is often more adaptable and can be more easily integrated with other systems. This can be especially useful in today's tech landscape where many different systems need to work together seamlessly.

- Cost Reduction: Open-sourcing software can help companies reduce costs. The community of AI developers contributes to the maintenance and development of the software, decreasing the resources the company has to invest.

- Ecosystem Creation: By open-sourcing their technology, companies can create an entire ecosystem around their products. This can lead to the development of related tools, libraries, and technologies, further strengthening the position of the original software.

While the software is given away for free, these indirect benefits often translate into substantial returns for the company. In Meta's case, releasing the Llama large language model first for research and now for commercial use means that thousands of people are building on its work and driving innovation for free.

Meta announced a wide array of supporters worldwide who share its enthusiasm for the open approach to modern AI. These include companies that have provided early feedback and are eager to build using Llama 2, cloud service providers planning to incorporate the model into their customer offerings, researchers devoted to conducting studies using the model, and individuals from the technology sector, academia, and policy-making who recognize the advantages of Llama.

“The open source Llama AI will allow us to create much smaller, faster, and more energy efficient yet still powerful models.” - Head of AI at Orange

How Llama 2 is pushing for more Open Source innovation

Open Source is contagious. Embrace it or you will be left behind. That means consuming open source and also contributing to it. The community and big tech companies will now accelerate their release of new contributions, and it is already happening.

This same week we had the following announcements in the open-source community:

- MosaicML launched MPT-7B-8K, a 7B-parameter open-source LLM with an 8k context length.

- Scale AI launched LLM Engine, a library to fine-tune open-source large language models for improved performance on your most important use cases.

- LangChain is introducing a new platform named LangSmith, which will enable developers to inspect, examine, test, and supervise their LLM applications all in one location.

Llama 2 will make Foundation Models more accessible to everyone

The AI infrastructure cost of running LLMs depends on a variety of factors, such as model parameter count, GPU compute power, training dataset size, and the demand for GPU capacity, all of which have grown significantly in recent years. The relationship between the optimal parameter count and training data size is widely accepted, but expanding the training dataset beyond what is currently used (Common Crawl, Wikipedia, books) may prove challenging.

GPU performance continues to increase, albeit at a slower rate, due to constraints like power, I/O, and the depletion of easily attainable optimizations. Despite this, an increase in demand for compute capacity is expected as the AI industry expands and the number of AI developers grows, leading to a greater need for GPUs, especially for testing during the model development phase.
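To make the link between model parameters and infrastructure needs concrete, here is a back-of-the-envelope sketch in Python. It assumes the nominal parameter counts of the three Llama 2 sizes and standard storage widths (4 bytes per parameter for fp32, 2 for fp16, 1 for int8), and it estimates only the memory needed to hold the weights. The function name `weight_memory_gb` is made up for this illustration.

```python
# Bytes needed to store one parameter at each numeric precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params, precision="fp16"):
    """Approximate memory (in GB, 1 GB = 1e9 bytes) to hold the weights alone.

    This ignores activations, the attention cache, and framework overhead,
    so real deployments need additional headroom.
    """
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# Nominal sizes; the exact parameter counts differ slightly.
LLAMA_2_SIZES = {"7B": 7e9, "13B": 13e9, "70B": 70e9}

for name, params in LLAMA_2_SIZES.items():
    estimates = ", ".join(
        f"{precision}: {weight_memory_gb(params, precision):.0f} GB"
        for precision in BYTES_PER_PARAM
    )
    print(f"Llama 2 {name} -> {estimates}")
```

Under these assumptions, the 7B model's weights fit on a single modern GPU at fp16, while the 70B model needs several GPUs or a lower-precision format, which is one reason the smaller sizes matter for accessibility.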

Concerns have been raised about whether high AI infrastructure costs may form a barrier for new entrants competing against well-funded incumbents. However, the rapid changes seen in these markets, facilitated by open-source models such as Alpaca, Stable Diffusion, and now Llama 2, suggest a landscape that is still fluid.

Over time, it is posited that the cost structure of the emerging AI software stack may come to resemble the traditional software industry.

This transition would be advantageous, fostering vibrant ecosystems with rapid innovation and abundant opportunities for entrepreneurial minds, as has been observed historically in the traditional software industry.

How companies can start using Llama 2 TODAY!

Llama is available to download here:

There is a form to enable access to Llama 2. Please visit the Meta website and accept the license terms and acceptable use policy before submitting this form. Requests are usually processed in 1-2 days.

- Use Llama 2 on Amazon SageMaker: Users can readily test and deploy Llama 2 models through SageMaker JumpStart, a hub offering a variety of algorithms, models, and machine learning solutions for a faster start with machine learning.

- Use Llama 2 on Azure AI Studio: At the Microsoft Inspire event, Meta and Microsoft announced support for Llama 2 LLMs on Azure and Windows. This partnership allows Azure customers to fine-tune and deploy Llama 2 models, and Windows developers to incorporate them in their applications through the ONNX Runtime via DirectML.

- Use Llama 2 on Hugging Face: available in the Model Hub and with all the popular Hugging Face libraries to run inference, fine-tune, and experiment with the model.

- Responsible use guide: a helpful tool for developers, outlining the recommended methods and factors to take into account when creating products with the help of large language models (LLMs). It encompasses advice for all phases of the development process, from the initial conceptual stage to the final deployment, all in a bid to ensure responsible usage.

- Fine-tuning Llama 2: Fine-tuning helps improve model performance by training on specific examples of prompts and desired responses. I really like the LLM Engine open-source library released by Scale. Fine-tuning is as simple as:

```python
from llmengine import FineTune

# Start a fine-tuning job on the 7B model from a CSV of training examples.
response = FineTune.create(
    model="llama-2-7b",
    training_file="s3://my-bucket/path/to/training-file.csv",
)

print(response.json())

from llmengine import Completion

# Query the resulting fine-tuned model by its name.
response = Completion.create(
    model="llama-2-7b.airlines.2023-07-17-08-30-45",
    prompt="Do you offer in-flight Wi-fi?",
    max_new_tokens=100,
    temperature=0.2,
)

print(response.json())
```

- Participate in the Llama Impact Challenge: Meta wants to activate the community of innovators who aspire to use Llama to solve hard problems. They launched a challenge to encourage a diverse set of public, non-profit, and for-profit entities to use Llama 2 to address environmental, education, and other important challenges. The challenge will be subject to rules which will be posted here prior to the challenge starting in a few weeks. Here's the link: https://ai.meta.com/llama/llama-impact-challenge/starts
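Returning to the fine-tuning step above: the training file is a set of prompts paired with desired responses. The sketch below shows one way to assemble such a file with Python's standard library. It assumes a two-column CSV with `prompt` and `response` headers, which should be checked against the LLM Engine documentation before use. The helper name `build_training_csv` and the example answers are hypothetical.

```python
import csv
import io


def build_training_csv(examples):
    """Serialize (prompt, response) pairs as CSV text with a header row.

    The column names "prompt" and "response" are an assumption about the
    expected training-file layout.
    """
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["prompt", "response"])
    writer.writeheader()
    for prompt, response in examples:
        writer.writerow({"prompt": prompt, "response": response})
    return buffer.getvalue()


# Hypothetical airline support examples, invented for illustration.
examples = [
    ("Do you offer in-flight Wi-fi?", "Yes, Wi-fi is available on most flights."),
    ("Can I bring a pet on board?", "Small pets are allowed in the cabin, in a carrier."),
]

csv_text = build_training_csv(examples)
print(csv_text)
```

The resulting text can be saved to a file and uploaded to wherever the fine-tuning job reads its `training_file` from. The `csv` module handles quoting, so responses containing commas or quotes stay in a single column.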

Conclusion

In conclusion, the emergence of Meta's Llama 2 is significant in the world of AI. As Percy Liang, director of Stanford's Center for Research on Foundation Models, has pointed out, Llama 2 could pose a substantial challenge to OpenAI. Although it may not outperform GPT-4 in all areas at present, its potential for improvement cannot be overlooked. Should Llama 2 emerge as the leading open-source alternative to OpenAI, as predicted by UC Berkeley's Professor Steve Weber, it would indeed be a considerable victory for Meta.

The AI landscape is ever-evolving, and the introduction of Llama 2 certainly adds a fascinating new dynamic to the field.