Foundation models are the next generation of AI models that are trained on massive amounts of unlabeled data. Training a foundation model from scratch is a complex and challenging task. It requires a significant amount of data, computing resources, and expertise.

Only a few large tech firms and very well funded startups currently have the ability to train large language models, so most companies must rely on them for access to this technology.

Before we start, let me make it clear that I do not recommend most businesses train their own foundation models from scratch. Instead, I recommend using some customization techniques, such as prompt tuning or fine-tuning, to adapt a pre-trained foundation model to your specific use case.

TODAY IN 10 MINUTES OR LESS, YOU'LL LEARN:

✔️ Why you should consider training your own Foundation Model

✔️ Challenges of Training a Foundation Model from Scratch

✔️ Steps to Train a Foundation Model

✔️ Cost implications training a Foundation Model from Scratch

✔️ Tips for Businesses Considering Training a Foundation Model

✔️ My Final Recommendation

Let's dive into it 🤿

Why you should consider training your own Foundation Model

There are a few reasons why you might consider training your own foundation model.

- Control over the model: When you train your own model, you have full control over the data it is trained on and the parameters that are used to train it. This means that you can tailor the model to your specific needs and requirements. For example, you can train a model to generate text in a specific style or to answer questions in a specific domain.

- Improved performance: Foundation models that are trained on a large and diverse dataset can achieve state-of-the-art performance on a variety of tasks. If you have access to a large dataset that is relevant to your domain, you can train a model that will outperform pre-trained models.

- Customization: Foundation models can be customized to meet specific needs.

In addition, you may want to build your own LLM if you need to change the model architecture or training dataset from existing pre-trained LLMs. For example, if you want to use a different tokenizer, change the vocabulary size, or change the number of hidden dimensions, attention heads, or layers.

Typically, in this case, the LLM is a core part of your business strategy & technological moat.

You are taking on some or a lot of innovations in LLM training, and have a large investment appetite to train and maintain expensive models on an ongoing basis. Typically, you have or will have lots of proprietary data generated associated with the LLM service to create a continuous model improvement loop for sustainable competitive advantage.

Challenges of Training a Foundation Model from Scratch

Training a foundation model from scratch is a challenging task. These are the main considerations:

- Data: The first challenge is to obtain a large enough dataset. This data can be collected from a variety of sources, such as the Internet, social media, and customer interactions. An example of this is The Pile. It is dataset is a large, diverse, open-source language modeling dataset that was constructed by EleutherAI in 2020 and made publicly available on December 31 of that year. It is composed of 22 smaller datasets, including 14 new ones.

- Compute Resources: Training a foundation model requires a significant amount of computing resources. This is because the models are trained on large datasets using deep learning algorithms. An example is what IBM Research has built, a new AI supercomputer called Vela, which has thousands of Nvidia A100 GPUs.

- Expertise: Training a foundation model requires expertise in machine learning and AI. This is because there are many factors that need to be considered, such as the choice of model architecture, the hyperparameters, and the training process. Those skills nowadays are scarse and very expensive.

🚀 OpenAI is setting new industry standards with its engineers earning an average annual salary of $925,000! This includes a base salary of $300,000 and a whopping $625,000 in stock-based compensation. Some even earn as much as $1.4 million! 💰 #AI #OpenAI #TechNews

— MLYearning.org (@mlyearning) June 27, 2023

Read here…

Steps to Train a Foundation Model

Here are the steps to train a foundation model:

- Collect a dataset. The foundation model will need to be trained on a very large dataset, for example, text or code. The dataset should be as diverse as possible, and it should cover the tasks that you want the model to be able to perform.

- Prepare the dataset. The dataset will need to be prepared before it can be used to train the model. This includes cleaning the data, removing any errors, and formatting the data in a way that the model can understand.

- Tokenization is the process of breaking down text into individual tokens. This is necessary for foundation models, as they need to be able to understand the individual words and phrases in the text.

- Configure the training process. You will need to configure the training process to specify the hyperparameters, the training algorithm architecture, and the computational resources that will be used.

- Train the model. The model will be trained on the dataset using the training model architecture that you specified. This can take a long time, depending on the size of the model and the amount of data.

- Evaluate the model. Once the model is trained, you will need to evaluate its performance on a held-out dataset. This will help you to determine whether the model is performing as expected.

- Deploy the model. Once you are satisfied with the model's performance, you can deploy it to production. This means making the model available to users so that they can use it to perform tasks. Deployment of LLMs is also compute intensive.

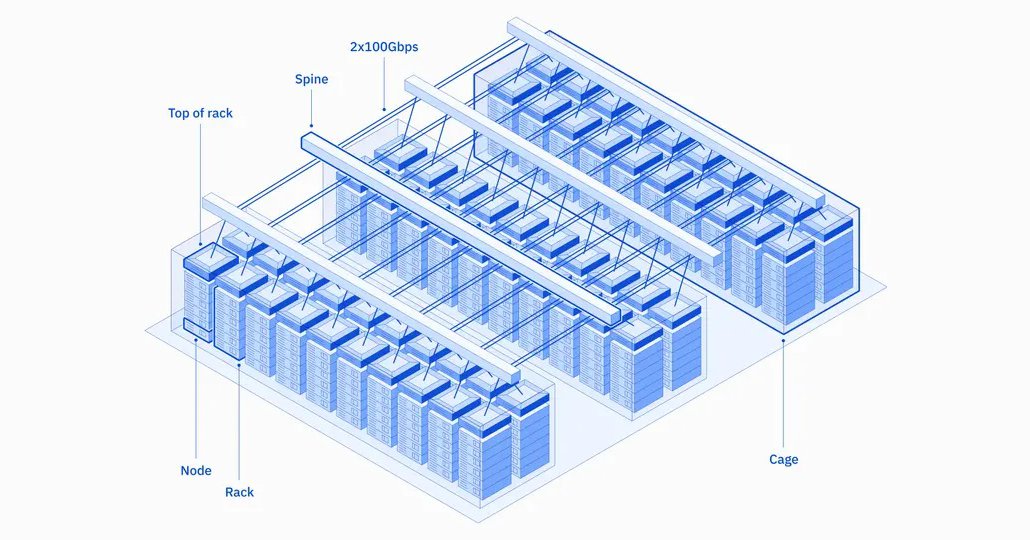

Here's a more detailed diagram from the Replit Engineering team:

Cost implications training a Foundation Model from Scratch

The cost of training a foundation model depends on a number of factors. As a general rule of thumb, training a foundation model can cost anywhere from tens of thousands to millions of dollars.

Here are some factors that can affect the cost of training a foundation model:

- The size of the model: The larger the model, the more data and computational resources it will require to train.

- The amount of data: The more data that is used to train the model, the more accurate it will be. However, more data also means more cost.

- The computational resources: The more powerful the computational resources, the faster the model will train. However, more powerful resources also mean more cost.

Here are some examples of the cost of training foundation models:

- Facebook's 65B LLaMA trained for 21 days on 2048 Nvidia A100 GPUs. At $3.93/hr on GCP, that's a total of ~$4M.

- Google's 540B PaLM was trained on 6144 v4 TPUs for 1200hrs. At $3.22/hr is a total of ~$27M

- PaLM actually trains on 6144 TPUs for 1200hrs and then 3072 TPUs for 336hrs — (3.22*6144*1200) + (3.22*3072*336) = ~$27m.

These estimates do not account for the time spent developing the model, experimenting with different approaches, or optimizing the hyperparameters. Additionally, if you are able to negotiate a discount with a cloud provider, you could save 50-80% of your costs, bringing the total down to $800k-$2m. It's also worth noting that Big Tech companies and well-funded startups likely did not train their model on public cloud providers, so their costs may have been lower if they owned the GPUs themselves.

The high cost of training LLM models can be prohibitive for many organizations, making their implementation financially unattainable.

Tips for Businesses Considering Training a Foundation Model

Here are some tips for businesses considering training a foundation model:

- Consider your needs: Before you start training a foundation model, it is important to consider your needs. What tasks do you want the model to be able to perform?

- Understand the cost: As you saw in the previous section, training a foundation model can be very expensive, so it is important to understand the cost before you start.

- Consider using a pre-trained model: If you do not have the resources to train a foundation model from scratch, you can consider using a pre-trained model. Pre-trained models are already trained on a large dataset, so you can use them to perform a variety of tasks and customize them.

- Work with a team of experts: Training a foundation model is a complex task, so it is important to work with a team of AI experts.

Conclusion and recommendation for Business:

Training a foundation model from scratch is a complex and challenging task, but it can be a valuable investment for businesses that are looking to gain a competitive advantage.

There is no one-size-fits-all answer to the question of whether to purchase or train an LLM. The best decision for you will depend on your specific needs and resources and company strategy.

My recommendation: customize a foundation model

When you customize a foundation model, you start with a pre-trained model that has already been trained on a massive dataset. This means that you can save a lot of time and money, as you do not need to collect your own dataset or train the model from scratch.

You can also tailor the model to your specific needs by fine-tuning or other techniques such as Parameter Efficient Fine Tuning (PEFT) with your own data.

Customizing foundation models is a great way to get the most out of these powerful tools. It is less expensive, faster, and can give you better performance than training a model from scratch.

Nvidia is making so much money with AI because its GPUs are used to train and run AI models. GPUs are much faster than CPUs for these tasks, and Nvidia has a strong ecosystem of partners that help to drive demand for its products and services. Additionally, the AI market is growing rapidly, which is creating a large addressable market for Nvidia's products and services.

Nvidia has been successfully selling shovels in all major gold rushes (dot com, cloud computing, machine learning, digital assets & blockchain, metaverse, and now AI).

"During a Gold Rush, sell Shovels" - Mark Twain.