The AI landscape keeps evolving, and the latest breakthrough to reach the mainstream is multimodal AI models. These models can change how businesses operate, making them more efficient and opening up new possibilities. In this blog post, we'll demystify multimodal AI models, explain why they matter for business, and take a closer look at GPT-4, a leading multimodal AI model from OpenAI.

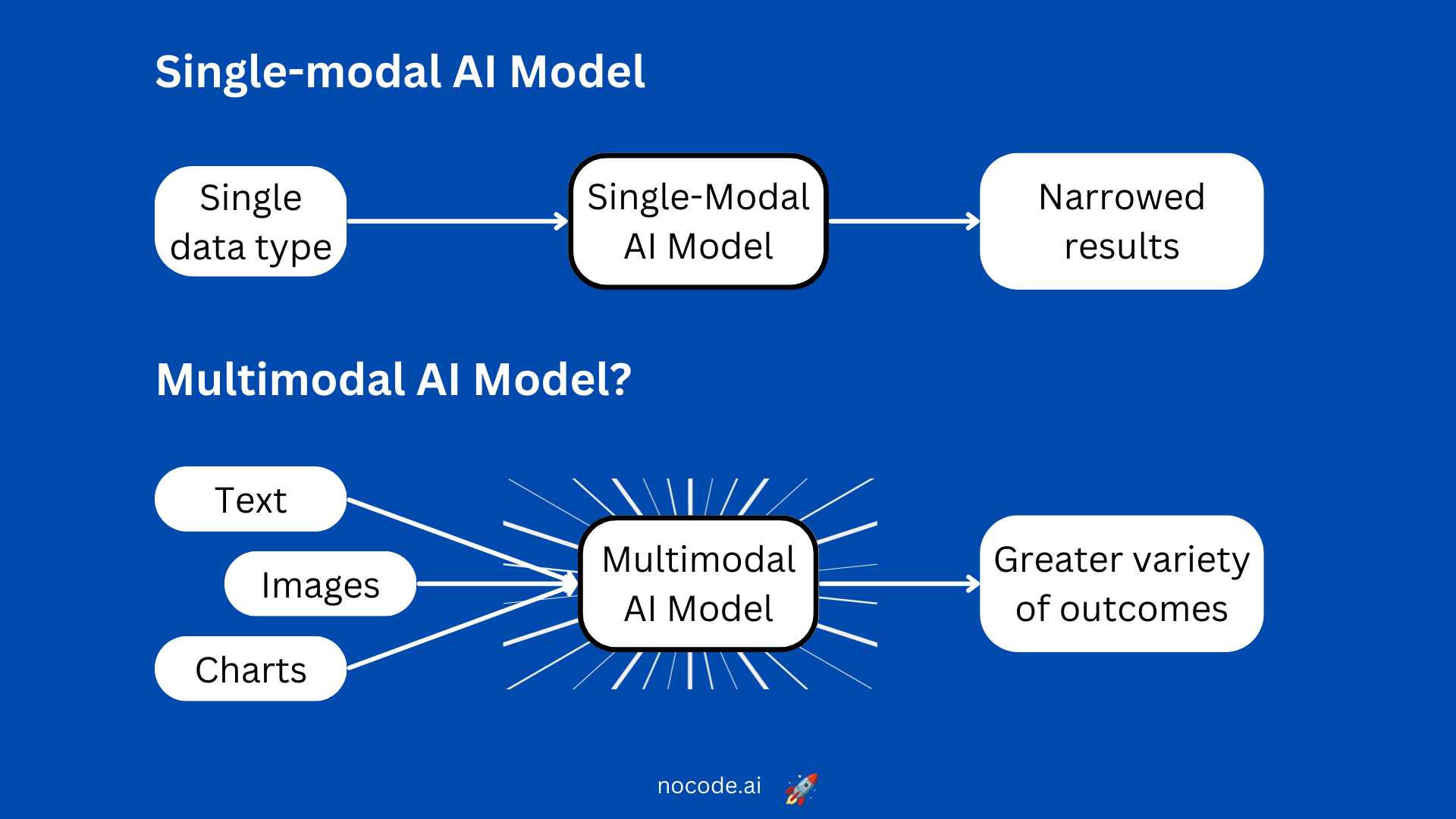

What are Multimodal AI Models?

Multimodal AI models are AI systems that can understand, and in some cases generate, information across multiple data modalities, such as text, images, audio, and video. Unlike unimodal models, which process only one type of data, multimodal models can combine several data types in a single analysis, which gives them a fuller picture of the input.

Why are Multimodal AI Models a Big Innovation for Business?

- Enhanced Decision-Making: Multimodal AI models allow businesses to make better-informed decisions by analyzing data from multiple sources together. This broader analysis can yield more accurate predictions and insights than any single data source would support on its own.

- Streamlined Workflows: By processing and interpreting multiple data types simultaneously, multimodal AI models can simplify and automate complex workflows, saving time and resources.

- Improved Customer Experience: Multimodal AI models can provide personalized customer experiences by analyzing customer behavior through various channels like text, images, and video. This enables businesses to offer tailored products and services, enhancing customer satisfaction.

- New Business Opportunities: The versatility of multimodal AI models opens up new business opportunities by enabling innovative applications and services that weren't possible with traditional AI models.

GPT-4: A Multimodal AI Model Powerhouse

OpenAI's GPT-4 (short for Generative Pre-trained Transformer 4) is a state-of-the-art multimodal AI model that has been making waves in the AI community since it was announced on March 14, 2023. Building on the success of its predecessors, GPT-3 and GPT-3.5, GPT-4 accepts both text and image inputs and produces human-like text in response. Audio and video were not among the inputs described at launch.
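To make "text plus image in, text out" concrete, here is a minimal sketch of how a question about a picture might be packaged into a single request. The field names are modelled on the content-parts message format used by OpenAI's Chat Completions API; treat them as an assumption to check against the current API reference. The helper `build_image_question` and the model name `your-multimodal-model` are hypothetical placeholders, and the snippet only builds the request body, it does not call any service.

```python
import base64


def build_image_question(image_bytes, question, model):
    """Bundle a text question and an image into one user message.

    Assumption: the payload shape follows the content-parts style of
    OpenAI's Chat Completions API. Verify field names before real use.
    """
    # Images are embedded as a base64 data URL so that the text and the
    # picture travel together in a single message.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:image/png;base64,{encoded}"},
                    },
                ],
            }
        ],
    }


# Placeholder bytes stand in for a real PNG file read from disk.
fake_png = b"\x89PNG placeholder bytes"
payload = build_image_question(
    fake_png,
    "What is unusual about this picture?",
    model="your-multimodal-model",  # hypothetical model name
)
```

The point of the design is that one message carries both modalities, so the model can relate the question to the picture directly instead of handling them in separate steps.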

How GPT-4 Works

GPT-4, like other transformer models, is built on self-attention: for each element of the input, the model weighs how relevant every other element is and uses those weights to build a context-aware representation. This lets it learn patterns and relationships within the input data and generate contextually relevant outputs. The model is pre-trained on a massive dataset. OpenAI has not published its exact composition, saying only that it includes publicly available data, such as internet data, and data licensed from third parties. This extensive pre-training gives GPT-4 a broad understanding of language and context, which makes it highly versatile.
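The self-attention idea can be sketched in a few lines of plain Python. This is a toy illustration of scaled dot-product self-attention, not GPT-4's actual implementation, which OpenAI has not published. As a simplifying assumption, the token vectors serve directly as queries, keys, and values, whereas a real transformer first applies learned projections. The function names and the example vectors are made up for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Scaled dot-product self-attention over a list of token vectors.

    Simplification: queries, keys, and values are the token vectors
    themselves (no learned projection matrices).
    """
    dim = len(tokens[0])
    outputs, all_weights = [], []
    for query in tokens:
        # Score the query against every token, scaled by sqrt(dim).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in tokens
        ]
        weights = softmax(scores)
        # The output is a weighted average of all token vectors.
        mixed = [
            sum(w * value[i] for w, value in zip(weights, tokens))
            for i in range(dim)
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Three toy "tokens": the first two point in similar directions.
tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
outputs, weights = self_attention(tokens)
```

Here the first token gives more weight to the second token, which is similar to it, than to the third, which is not. That is the sense in which attention lets each part of the input draw on the most relevant context.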

GPT-4's Multimodal Capabilities in Action

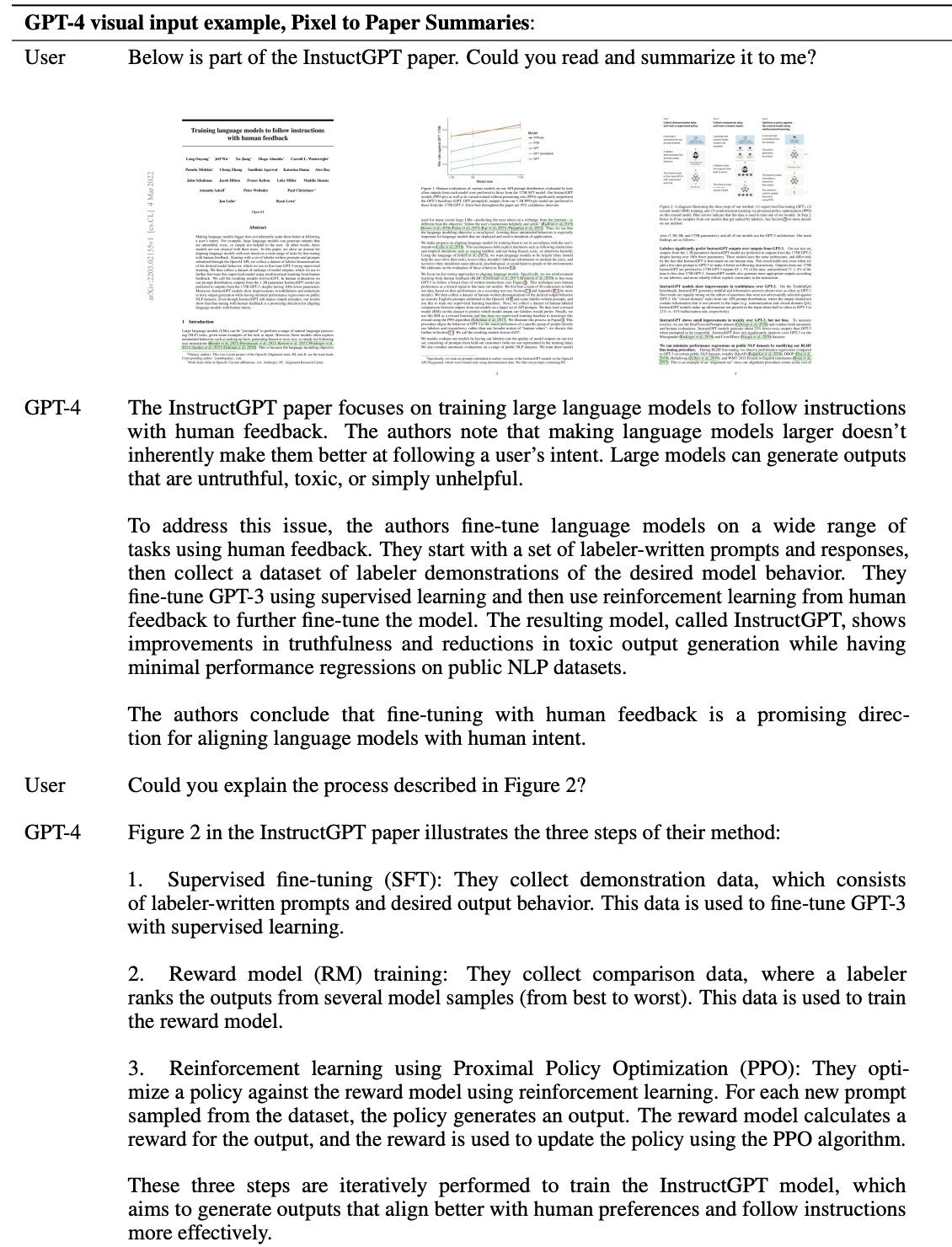

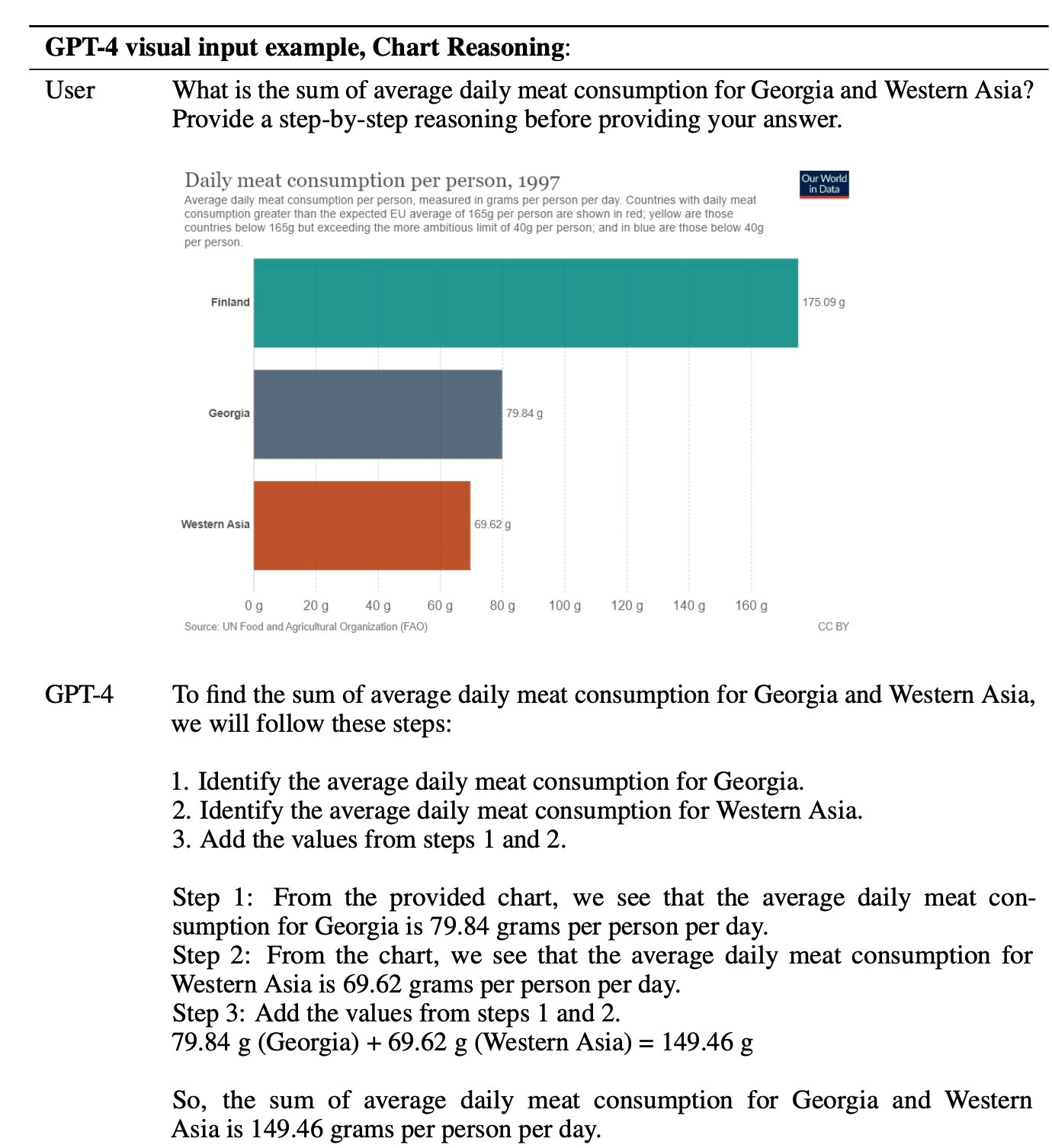

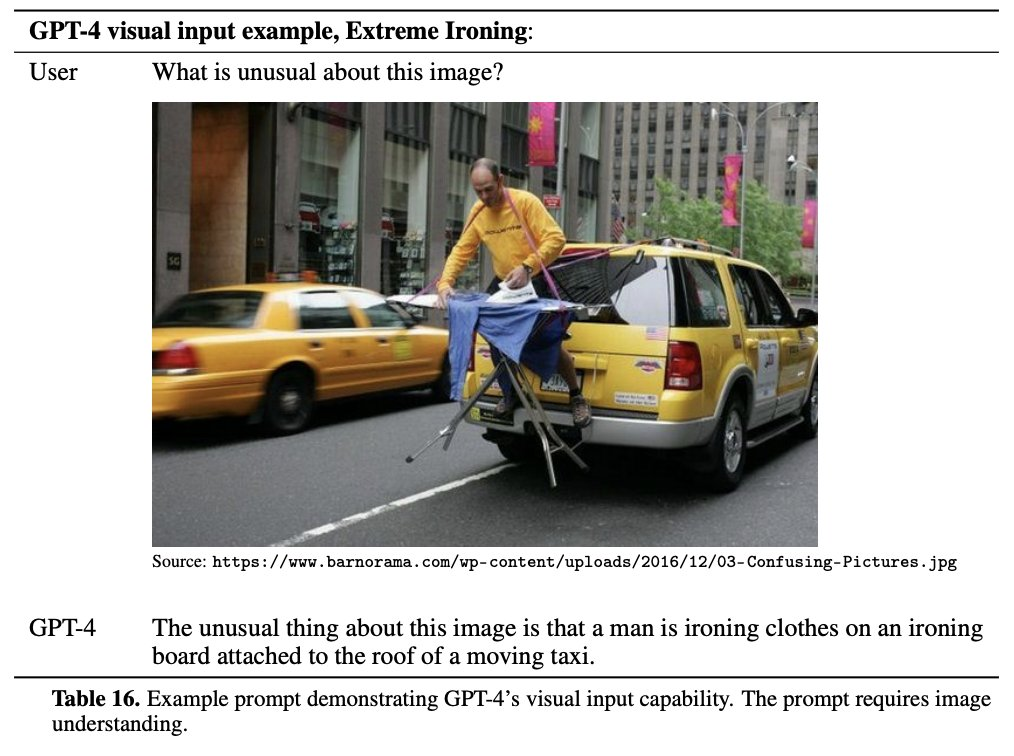

Let's look at these multimodal capabilities in action. The examples below, many of them shared on Twitter in the days after launch, show what GPT-4 can do, from recognizing unusual patterns in images to comprehending complex mathematical and physical diagrams.

- A visual assistant:

We are thrilled to present Virtual Volunteer™, a digital visual assistant powered by @OpenAI’s GPT-4 language model. Virtual Volunteer will answer any question about an image and provide instantaneous visual assistance in real-time within the app. #Accessibility #Inclusion #CSUN pic.twitter.com/IxDCVfriGX

— Be My Eyes (@BeMyEyes) March 14, 2023

- Comprehension of schematics:

- Drug Discovery:

GPT-4 does drug discovery.

Give it a currently available drug and it can:

- Find compounds with similar properties

- Modify them to make sure they're not patented

- Purchase them from a supplier (even including sending an email with a purchase order)

pic.twitter.com/sWB8HApfgP

— Dan Shipper 📧 (@danshipper) March 14, 2023

- Understanding graphs:

- Identify anomalies within a picture:

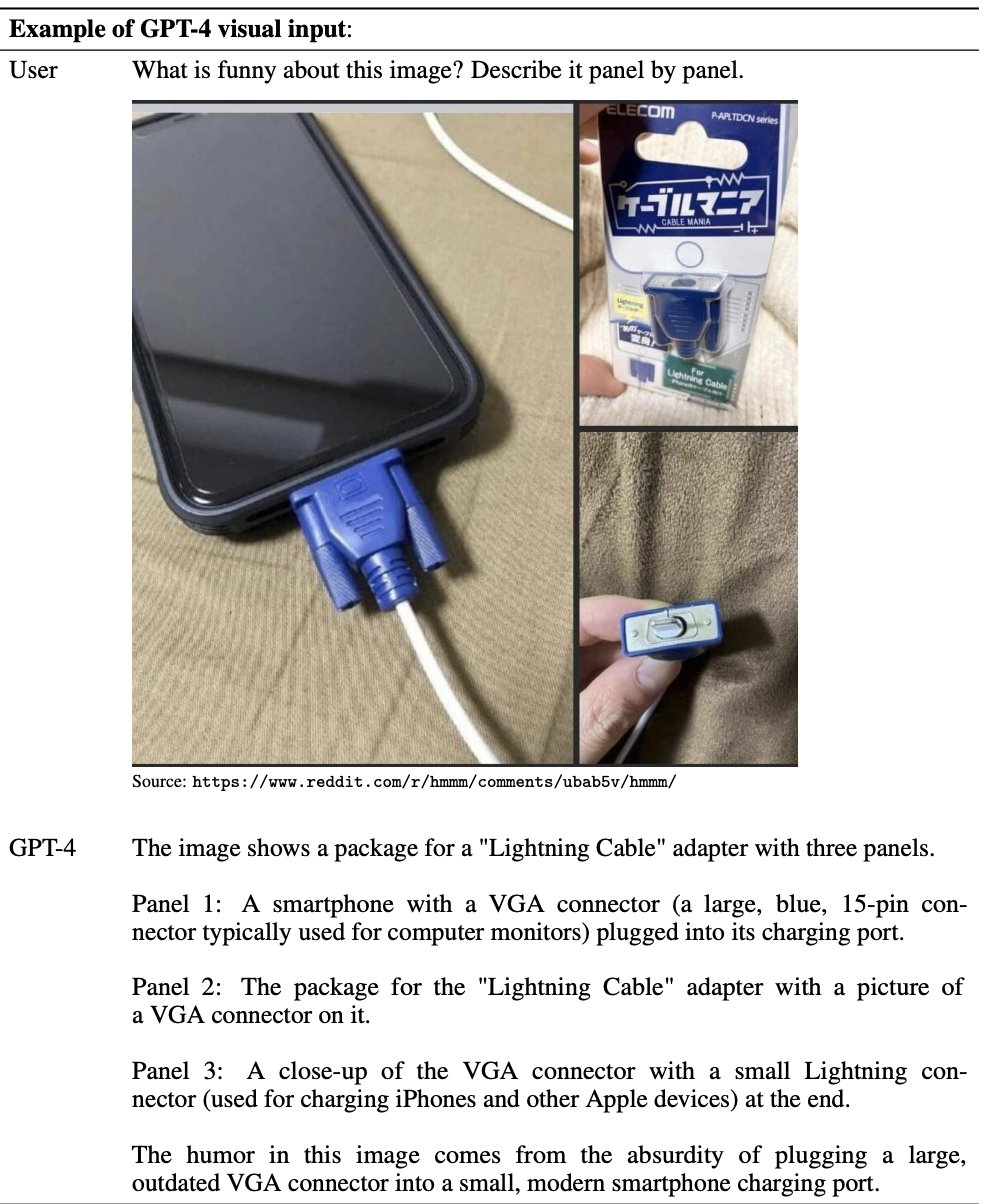

- Understanding funny elements in pictures:

- Turn your napkin sketch into a working web application:

gpt-4 can turn your napkin sketch into a web app, instantly.

we are deep into uncharted territory here. pic.twitter.com/V5HtYHgS6u

— Siqi Chen (@blader) March 14, 2023

- GPT-4 for iOS app development:

hey gpt4, make me an iPhone app that recommends 5 new movies every day + trailers + where to watch.

My ambitions grew as we went along 👇 pic.twitter.com/oPUzT5Bjzi

— Morten Just (@mortenjust) March 15, 2023

To sum up

Multimodal AI models like GPT-4 are reshaping the AI landscape and opening new opportunities for businesses across diverse sectors. By using these models to process and analyze multiple data types together, businesses can improve decision-making, streamline workflows, and deliver personalized customer experiences. As GPT-4 and its successors extend what AI can do, AI-driven innovation is likely to play an even larger role in business success. Now is a good time to start exploring what multimodal AI models can do for your business.