It's time to understand how we've reached today's AI hype and what differentiates the new type of AI from traditional techniques.

TODAY IN 5 MINUTES OR LESS, YOU'LL LEARN:

- How Traditional AI Models Work

- How Foundation Models Work

- Applications of Foundation Models

- Generative AI

- Advantages of foundation models

- Disadvantages of foundation models

Let's dive into it 🤿

AI has come a long way over the past few decades. In the early days, AI systems were fairly limited, focusing on narrow tasks like playing chess or solving math problems. These early systems relied on hand-coded rules and logic designed by human experts. While groundbreaking at the time, they could only perform the specific tasks they were programmed for.

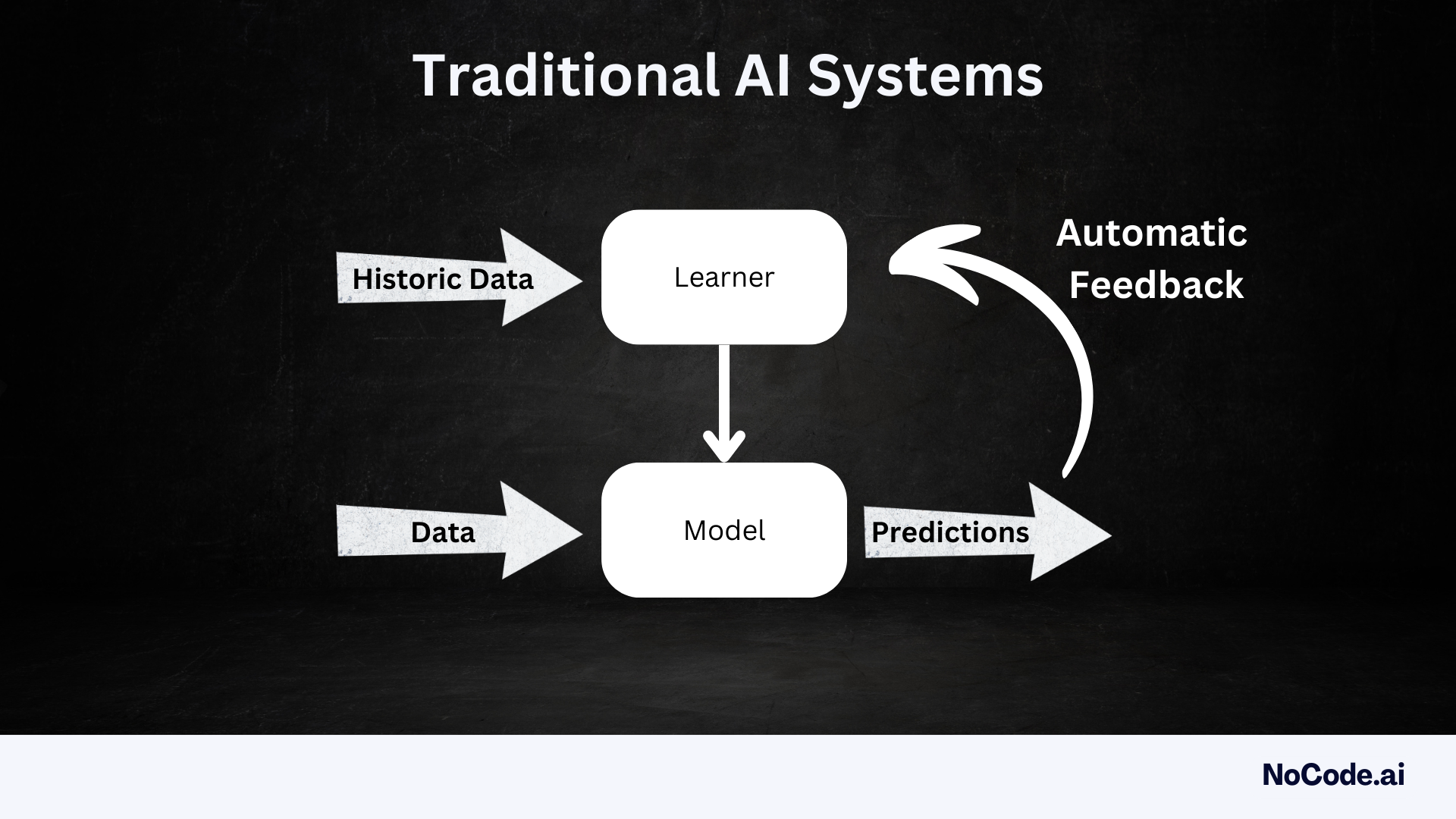

In recent years, a new paradigm in AI has emerged: foundation models. Unlike traditional AI, foundation models learn from massive datasets covering a broad range of knowledge across many domains. They use self-supervised learning techniques, such as predicting the next word or a masked word in a sentence. Through this training, foundation models acquire broad, general-purpose capabilities without any explicit task-specific programming.

Two of the most prominent foundation models today are OpenAI's GPT-4 and Google's PaLM. Because they are trained at enormous scale, they can generate remarkably human-like text on virtually any topic. OpenAI has not disclosed GPT-4's parameter count. PaLM, however, has an astonishing 540 billion parameters, and Google reports that this scale enables complex chains of multi-step reasoning.
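The following back-of-the-envelope estimate puts PaLM's 540 billion parameters in perspective. It relies on a common rule of thumb: training a transformer costs about 6 floating-point operations per parameter per training token. It also uses the roughly 780 billion training tokens reported for PaLM and assumes 16-bit weights. Treat the results as order-of-magnitude figures, not official numbers.

```python
# Inputs: PaLM's reported size and training tokens; the byte width is an assumption.
PARAMS = 540e9          # PaLM parameter count
TRAIN_TOKENS = 780e9    # training tokens reported for PaLM
BYTES_PER_PARAM = 2     # assumes 16-bit (e.g. bfloat16) weights

# Rule of thumb: ~6 FLOPs per parameter per token
# (roughly 2 for the forward pass and 4 for the backward pass).
train_flops = 6 * PARAMS * TRAIN_TOKENS

# Memory needed just to store the weights, ignoring activations and optimizer state.
weight_bytes = PARAMS * BYTES_PER_PARAM

print(f"training compute ~ {train_flops:.2e} FLOPs")    # training compute ~ 2.53e+24 FLOPs
print(f"weights alone ~ {weight_bytes / 1e12:.2f} TB")  # weights alone ~ 1.08 TB
```

More than a terabyte just to hold the weights is one reason models of this size are typically served across many accelerators rather than on a single machine.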

How Traditional AI Models Work

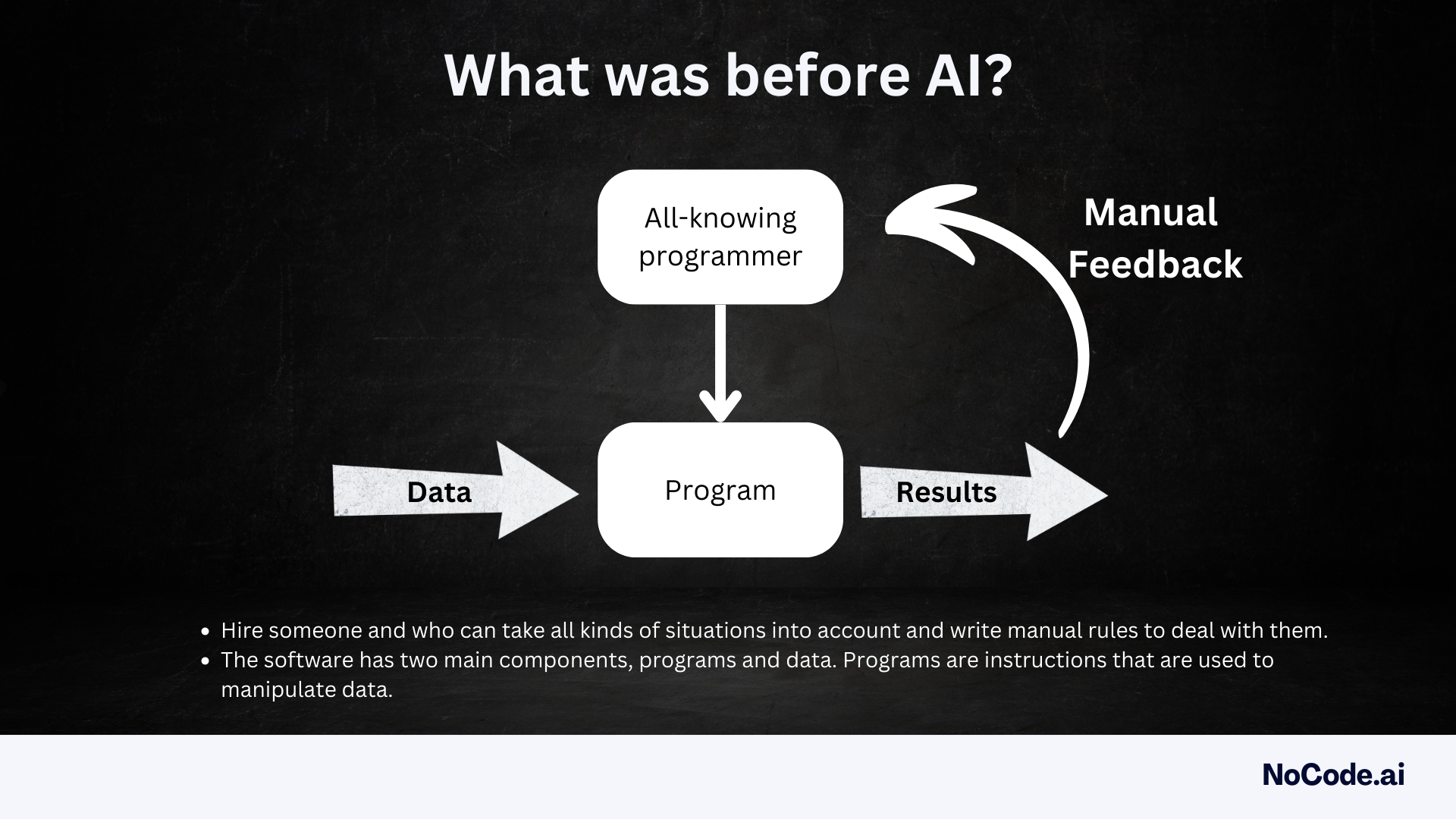

Before the rise of foundation models, most AI systems were built using more traditional techniques. These traditional AI models relied on explicit human engineering and rules, rather than learning directly from data.

For example, early game-playing systems like chess AIs used hand-coded logic with human expert strategies. To accomplish specific tasks, programmers had to outline detailed instructions telling the AI exactly what to do in different situations.
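The toy sketch below shows the kind of hand-coded rule a classic chess program might contain. It counts material using the conventional piece values (pawn 1, knight 3, bishop 3, rook 5, queen 9), then greedily picks the move that leaves the best count. The string board encoding and the function names are hypothetical simplifications. Real engines combined much richer hand-tuned evaluations with game-tree search.

```python
# Conventional piece values: chosen by people, not learned from data.
PIECE_VALUES = {"P": 1, "N": 3, "B": 3, "R": 5, "Q": 9, "K": 0}


def material_score(pieces: str) -> int:
    """Return White's material minus Black's.

    `pieces` lists the pieces on the board: uppercase for White,
    lowercase for Black (e.g. "KQRPPkrp").
    """
    score = 0
    for piece in pieces:
        value = PIECE_VALUES[piece.upper()]
        score += value if piece.isupper() else -value
    return score


def choose_move(candidates: dict[str, str]) -> str:
    """Greedy one-move lookahead: pick the move with the best material score.

    `candidates` maps a move name to the pieces left on the board after it.
    """
    return max(candidates, key=lambda move: material_score(candidates[move]))


print(material_score("KQRPPkrp"))                          # 10
print(choose_move({"Qxr": "KQPkp", "Qxp": "KQPkr"}))       # Qxr
```

Every number and decision here was written by a programmer. Changing the program's "strategy" means editing the rules by hand.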

Where traditional models did learn from data, they were typically trained on relatively small, labeled datasets built for a single task. As a result, they can usually perform only the task they were trained on. For example, a model trained to classify images of cats and dogs can classify images of cats and dogs, and nothing else.

Other traditional techniques included decision trees, logistic regression, and support vector machines. These models focused on narrow domains and required carefully labeled training data relevant to their specific task.
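The minimal logistic regression below, written in plain Python and trained by gradient descent, shows how one of these classic techniques learns from labeled data. The six-point dataset is made up for illustration. In practice you would usually reach for a library such as scikit-learn rather than writing the loop yourself.

```python
import math

# Made-up labeled data: one numeric feature per example, label 0 or 1.
X = [0.5, 1.0, 1.5, 3.0, 3.5, 4.0]
Y = [0, 0, 0, 1, 1, 1]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic_regression(xs, ys, lr=0.5, epochs=5000):
    """Fit weight w and bias b with batch gradient descent on the log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction error for every example under the current parameters.
        errors = [sigmoid(w * x + b) - t for x, t in zip(xs, ys)]
        # Gradients of the mean log loss with respect to w and b.
        grad_w = sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = sum(errors) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def predict(x: float, w: float, b: float) -> int:
    """Classify as 1 when the predicted probability is at least 0.5."""
    return 1 if sigmoid(w * x + b) >= 0.5 else 0


w, b = train_logistic_regression(X, Y)
print([predict(x, w, b) for x in X])  # [0, 0, 0, 1, 1, 1]
```

The model only ever sees this one task's labels. A different task would need a new labeled dataset and a newly trained model.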

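Unlike modern deep neural networks, traditional models typically relied on features engineered by hand. To identify images, for example, traditional computer vision systems first extracted low-level features such as edges and shapes using rules designed by experts, then passed those features to a classifier. Deep learning models, by contrast, learn their own features directly from raw pixels and build a more holistic representation of the input.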
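The snippet below is a deliberately simplified stand-in for the hand-designed filters used in classic computer vision, such as the Sobel operator. It marks vertical edges by comparing each pixel with its right-hand neighbour, then condenses the result into a single feature. The image, the threshold, and the function names are illustrative assumptions.

```python
# A tiny 4x6 grayscale image (0 = black, 9 = white): dark left half, bright right half.
IMAGE = [
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
]


def vertical_edge_map(image, threshold=5):
    """Return 1 wherever brightness jumps sharply between horizontal neighbours.

    Both the rule and the threshold are chosen by a person; nothing is learned.
    """
    return [
        [1 if abs(row[c + 1] - row[c]) >= threshold else 0 for c in range(len(row) - 1)]
        for row in image
    ]


def edge_density(edge_map):
    """Summarise the edge map as one hand-crafted feature for a downstream classifier."""
    cells = sum(len(row) for row in edge_map)
    return sum(sum(row) for row in edge_map) / cells


edges = vertical_edge_map(IMAGE)
print(edges[0])             # [0, 0, 1, 0, 0]
print(edge_density(edges))  # 0.2
```

A traditional pipeline would feed features like `edge_density` into a classifier such as a support vector machine. A deep network would instead learn its own filters from labeled images.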

Overall, traditional AI models had limited flexibility: they could only perform the niche tasks they were designed and trained for.

They relied heavily on human guidance and lacked the broad, general-purpose capabilities of modern foundation models trained on massive datasets.

How Foundation Models Work

Foundation models are typically built on a specific kind of neural network architecture called the transformer. A transformer turns an input sequence, such as a sentence split into tokens, into an output, and it can be trained on large amounts of unlabeled data.

Foundation models are trained on massive datasets of unlabeled data. This allows them to learn the underlying patterns in the data and to generalize to new tasks. For example, a foundation model trained on a dataset of text and code can generate text, translate between languages, and write different kinds of creative content.

Transformers allow models to look at an entire sequence, like a sentence, at once to understand the full context and meaning. This is done through an attention mechanism that focuses on the most relevant parts of the input. Transformers were a breakthrough in natural language processing and are the key enabler of large language models.
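The core of the attention mechanism is scaled dot-product attention, softmax(QK^T / sqrt(d)) V, as introduced in the original transformer paper. The plain-Python sketch below computes it for a few toy token vectors. It leaves out the learned query/key/value projection matrices, the multiple heads, and the masking that real transformers use. The vectors are made-up numbers.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V.

    Each argument is a list of vectors (one per token); d is the key size.
    """
    d = len(keys[0])
    outputs = []
    for q in queries:
        # How strongly this query matches every key in the whole sequence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # The output is a weighted average of all the value vectors.
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        )
    return outputs


# Self-attention over three toy tokens: queries, keys, and values are the same vectors.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in attention(tokens, tokens, tokens):
    print([round(x, 3) for x in row])
```

Each token's scores are computed against every other token. This is how the model considers the whole sequence at once.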

At their core, foundation models are trained on massive text datasets using self-supervised learning. This allows them to learn relationships between words, concepts, and language structure from patterns in the data. With enough data and computing power, foundation models gain extremely versatile capabilities.
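Self-supervised training gets its labels from the text itself. The sketch below turns one raw sentence into training examples in two common styles: next-word prediction (the GPT-style objective) and masked-word prediction (the BERT-style objective). For simplicity it splits on whitespace, whereas real models use subword tokenizers. The function names and the `[MASK]` placeholder are illustrative.

```python
def next_word_pairs(text, context_size=3):
    """Turn raw text into (context, target) pairs for next-word prediction.

    The target is simply the word that follows the context, so no human
    annotation is needed.
    """
    words = text.split()
    return [(words[i - context_size:i], words[i]) for i in range(context_size, len(words))]


def masked_word_examples(text, mask_token="[MASK]"):
    """Hide each word in turn; the hidden word becomes the training target."""
    words = text.split()
    examples = []
    for i, word in enumerate(words):
        masked = words[:i] + [mask_token] + words[i + 1:]
        examples.append((" ".join(masked), word))
    return examples


sentence = "the cat sat on the mat"
for context, target in next_word_pairs(sentence):
    print(context, "->", target)
print(masked_word_examples(sentence)[1])  # ('the [MASK] sat on the mat', 'cat')
```

Any amount of raw text can be turned into training data this way, which is what makes training on massive unlabeled datasets possible.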

Applications of Foundation Models

Unlike traditional narrow AI systems, foundation models exhibit impressive few-shot and zero-shot learning abilities. They can often pick up a new task from just a few examples (few-shot) or from instructions alone (zero-shot) given in the prompt, without any retraining.
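In practice, "a few examples" usually means writing them directly into the prompt. The sketch below assembles a few-shot prompt for a made-up sentiment task, and passing an empty example list yields a zero-shot prompt. The Input/Output layout is just one common convention, not a format any particular model requires. Sending the prompt to a model through whatever API you use is left out.

```python
def build_prompt(instruction, examples, query):
    """Combine an instruction, optional worked examples, and a new input into one prompt.

    With an empty `examples` list this produces a zero-shot prompt.
    """
    lines = [instruction, ""]
    for text, label in examples:
        lines += [f"Input: {text}", f"Output: {label}", ""]
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)


examples = [
    ("The battery lasts all day.", "positive"),
    ("The screen cracked within a week.", "negative"),
]
print(build_prompt("Classify the sentiment of each review.", examples, "Setup took two minutes."))
```

The model's weights never change here. The examples only steer its predictions for the text that follows "Output:".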

For instance, GPT-4 can generate coherent essays, product descriptions, news articles, poetry, and even programming code when prompted with just a few examples or lines of context. The applications span countless domains and industries.

Some areas where foundation models are being applied:

- Enhancing search engines and recommendation systems

- Automating customer support through conversational agents

- Generating creative content like stories, poems, or imagery

- Producing computer code from natural language descriptions

Generative AI

One of the most promising applications of foundation models is Generative AI. Generative AI refers to algorithms that can automatically generate new outputs like text, images, audio, or code.

ChatGPT is one example of a generative AI system built on a foundation model. It can engage in remarkably human-like conversation and provide detailed, high-quality responses to natural language prompts on nearly any topic.

The possibilities for generative AI are vast, from helping content creators to aiding developers in writing software. However, there are challenges around bias, safety, and responsible development that must be addressed as these systems become more capable.

Advantages of foundation models

There are several advantages to using foundation models over traditional AI models. First, foundation models are more general-purpose. They can be used for a wider range of tasks, which can save time and money. Second, foundation models tend to be more robust to changes in the data, because they have learned the underlying patterns of the data rather than just memorizing specific examples. Key advantages:

- Lower upfront costs through less labeling

- Faster deployment through fine-tuning and inferencing

- Equal or better accuracy for multiple use cases

- Incremental revenue through better performance

- Lower technical barriers to entry and faster ramp-up for organizations

The benefits of foundation models come from removing the need for task-specific engineering. By training on broad, general data, foundation models acquire versatile capabilities that let them adapt to different problems as needed. This represents a major evolution in AI and has unlocked new possibilities like few-shot learning.

Disadvantages of foundation models

There are also some disadvantages to using foundation models. First, they require a lot of data to train, which can be a challenge, especially for small businesses or organizations. Second, foundation models can be computationally expensive to train and deploy. If you want to learn more about what it takes to train a foundation model, check out my issues on the topic here:

Conclusion

Foundation models are a promising new approach to AI. They offer several advantages over traditional AI models, such as their general-purpose nature and their robustness to changes in the data. However, they also have some disadvantages, such as the large amount of data needed for training and their high computational cost.

The choice between traditional AI models and foundation models largely depends on the specific requirements of the task at hand. While traditional models offer transparency and are suitable for tasks with well-defined rules, foundation models excel at complex tasks requiring pattern recognition and nuanced understanding.

Ultimately, the future of AI will likely involve a combination of both approaches. It would leverage the strengths of foundation models in pattern recognition and complex problem-solving while incorporating elements of traditional AI models to improve transparency and reduce reliance on vast amounts of data and computational power.

As AI continues to evolve, it is important that we understand these models, their strengths, and their limitations. This understanding allows us to harness their capabilities effectively while addressing the ethical, societal, and environmental implications they present.