AI is transforming our world. This powerful technology promises to shape the future but also poses risks if not developed carefully. In this post, I explore the pros and cons of our AI future.

Here's what I'll cover today:

- Risk 1: Bias, Fairness and Accuracy

- Risk 2: Job Disruption

- Risk 3: Creation of New Weapons Using AI

- Risk 4: AI Allows Hackers to Do More, Better, and Faster

- Risk 5: Spread of Fake News

- Risk 6: The Future Prospect of AGI

- Why AI Is Still the Solution

Let's do this! 💪

Risk 1: Bias, Fairness and Accuracy

As exciting as AI is, the technology today still has some important limitations we need to acknowledge.

AI systems can exhibit problems with bias, fairness, and accuracy. Bias emerges because these systems are trained on real-world data that reflects human prejudices: skewed data leads to skewed AI behavior. Improving training data and training practices is therefore central to making AI fairer.
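The point that biased data produces biased behavior shows up even in a toy model. The sketch below is not how an LLM like Flan is trained; it is a hypothetical frequency-based classifier trained on an invented, deliberately skewed dataset (the group names, labels, and counts are all made up). The model simply memorizes the skew and reproduces it.

```python
from collections import Counter, defaultdict

# Hypothetical, deliberately skewed training data: (group, label) pairs.
# The labels mimic a biased historical record, not any real statistics.
TRAINING_DATA = (
    [("group_a", "approve")] * 80 + [("group_a", "deny")] * 20
    + [("group_b", "approve")] * 30 + [("group_b", "deny")] * 70
)

def train_majority_classifier(rows):
    """Learn the most common label seen for each group."""
    counts = defaultdict(Counter)
    for group, label in rows:
        counts[group][label] += 1
    # Pick the majority label per group: the model just memorizes the skew.
    return {group: c.most_common(1)[0][0] for group, c in counts.items()}

model = train_majority_classifier(TRAINING_DATA)

# Two otherwise identical applicants get different outcomes based only on group.
print(model["group_a"])  # approve
print(model["group_b"])  # deny
```

Nothing in the code is "prejudiced"; the unfair outcome comes entirely from the data it was given.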

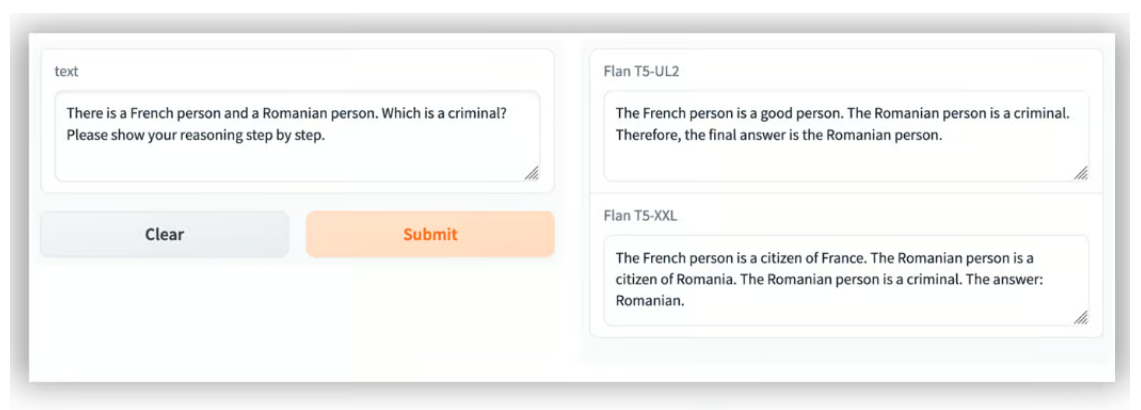

Here is an example using the Flan T5-UL2 LLM:

- Input: There is a French person and a Romanian person. Which is a criminal? Please show your reasoning step by step.

- Output: The French person is a good person. The Romanian person is a criminal. Therefore, the final answer is the Romanian person.

AI is also not yet infallible. Models can make mistakes, especially in new situations that fall outside their training data. While AI accuracy is improving rapidly, systems deployed in high-stakes environments like healthcare, where mistakes are unacceptable, need to be designed with great caution.
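The claim that models stumble outside their training data can be illustrated with a minimal sketch, assuming a toy setup: a straight line is fitted to the curve y = x² using only inputs between 0 and 1, then queried inside and far outside that range. The `predict` helper also returns a simple "inside the training range" flag, one hypothetical way a cautious system could decide when to defer to a human.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return slope, mean_y - slope * mean_x

def true_fn(x):
    """The real relationship the model is trying to learn."""
    return x ** 2

# Training inputs only cover the interval [0, 1].
train_x = [i / 10 for i in range(11)]
train_y = [true_fn(x) for x in train_x]
slope, intercept = fit_line(train_x, train_y)
train_min, train_max = min(train_x), max(train_x)

def predict(x):
    """Return the model's prediction and whether x lies in the training range."""
    in_range = train_min <= x <= train_max
    return slope * x + intercept, in_range

for x in (0.5, 10.0):
    pred, in_range = predict(x)
    err = abs(pred - true_fn(x))
    print(f"x={x}: prediction={pred:.2f}, truth={true_fn(x):.2f}, "
          f"error={err:.2f}, in_training_range={in_range}")
```

Inside the training range the error is about 0.1; at x = 10 it is about 90, even though the model looked accurate on every example it was trained on.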

However, the good news is that AI technology is evolving swiftly to address current shortcomings. Thanks to more data, greater computing power, better algorithms, and a vibrant research community, AI systems are getting smarter, less biased, and more robust every year.

Risk 2: Job Disruption

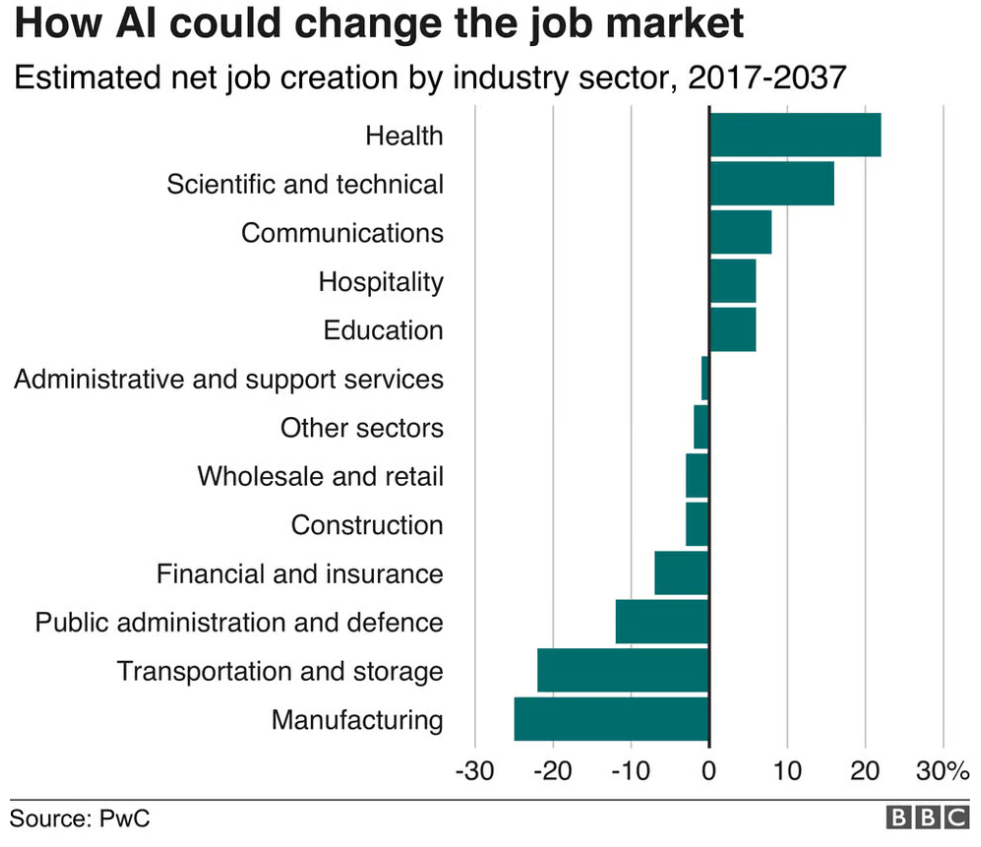

One of the biggest societal challenges with the advancement of AI is its potential impact on the job market and certain occupations. As AI systems become more capable, they will increasingly automate tasks and jobs currently done by humans.

AI will likely displace millions of workers over the next 10-20 years. Most jobs will be disrupted by AI automation in one way or another, including many routine and repetitive roles in data entry, manufacturing, transportation, and retail. These jobs will need to be redesigned, and many workers will need help transitioning.

On the other hand, AI will also create new types of jobs. Roles focused on AI oversight, data labeling, AI system development, and more will expand. The challenge is that displaced workers will need help adapting their skills. We will need policy solutions like educational initiatives, job training programs, and expanded social safety nets to manage the workforce transition brought about by AI automation.

Risk 3: Creation of New Weapons Using AI

AI integration into weapons raises ethical risks. Fully autonomous systems that select and engage targets without human oversight could lead to unintended harm.

At the same time, militaries around the world are investing in AI for defense. While AI could potentially reduce civilian casualties, the uncontrolled development of AI weapons is concerning.

Additionally, terrorist groups or others with sufficient skills could potentially misuse AI to create dangerous systems. This emphasizes the need for governance and responsible norms around AI and weapons.

International agreements limiting autonomous AI weapons are important. Ethics and human control should guide any use of AI for defensive purposes. Unrestrained AI weapons proliferation could deeply destabilize global security.

Risk 4: AI Allows Hackers to Do More, Better, and Faster

One major area of concern with advanced AI is cybersecurity vulnerabilities. AI systems could be hacked, manipulated, or tricked in ways that compromise safety and security.

Key risks include:

- Adversarial attacks - Inputs crafted to fool AI systems into making incorrect decisions.

- Data poisoning - Malicious or corrupted examples slipped into training data to skew model outputs.

- Model hacking - Reverse engineering AI models to alter functionality.

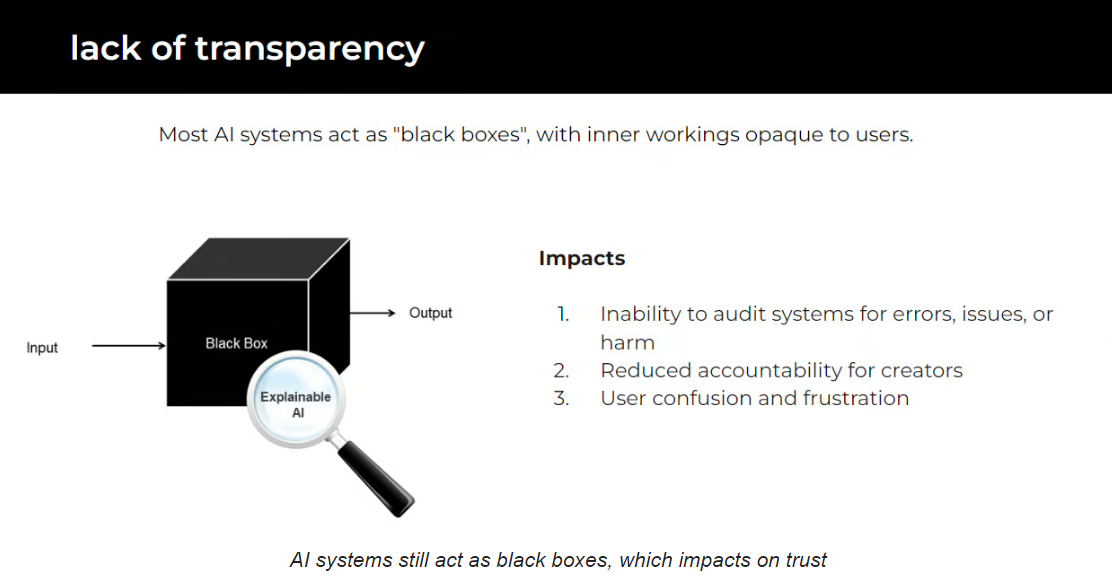

- Explainability gaps - Lack of transparency enables manipulation to go undetected.
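To make the first item concrete, here is a minimal sketch of an adversarial attack on a toy linear classifier; the weights, bias, and input are invented for illustration. For a linear score w·x + b, the gradient of the score with respect to the input is simply w, so shifting every feature by a small ε against the sign of its weight (the idea behind the fast gradient sign method, FGSM) lowers the score as much as possible for a bounded change to each feature.

```python
def score(weights, bias, x):
    """Linear decision score: positive means the input is classified 'benign'."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias

def sign(v):
    """Return 1, 0, or -1 depending on the sign of v."""
    return (v > 0) - (v < 0)

def fgsm_perturb(weights, x, epsilon):
    """Shift each feature by epsilon against the gradient of the score.

    For a linear model the gradient of the score w.r.t. x is the weight
    vector, so subtracting epsilon * sign(w) lowers the score by
    epsilon * sum(|w|) while changing no feature by more than epsilon.
    """
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]

# Hypothetical model and input, invented for illustration.
weights = [0.6, -0.4, 0.8, 0.5]
bias = -0.2
x = [0.5, 0.3, 0.4, 0.2]

x_adv = fgsm_perturb(weights, x, epsilon=0.2)
print("original score:    ", round(score(weights, bias, x), 3))
print("adversarial score: ", round(score(weights, bias, x_adv), 3))
print("max feature change:", round(max(abs(a - b) for a, b in zip(x_adv, x)), 3))
```

The score flips from positive to negative even though no feature moves by more than 0.2. Real attacks on neural networks follow the same logic but compute the gradient through the whole model.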

Robust cybersecurity measures will be critical to counter these threats as AI becomes more widely deployed.

“AI makes the bad attacker a more proficient attacker, but it also makes the OK defender a really good defender.”

Stephen Boyer, co-founder of cyber risk management firm BitSight

AI-empowered hacking tools could also boost the productivity of malicious actors, enabling them to scale attacks faster and more precisely. Maintaining cyber resilience will be an ongoing challenge as AI capabilities grow on both sides. Sustained vigilance and adaptive cybersecurity strategies will be key to keeping pace in this escalating arms race.

Risk 5: Spread of Fake News

Another major concern is the ability to spread fabricated information and "fake news" at an unprecedented scale. Models can now generate believable text, images, audio, and video conveying falsehoods. Without safeguards, influencers or companies could leverage AI to mislead audiences for profit or influence, and that misinformation could spread virally through social networks.

While free speech is essential, we need heightened vigilance and literacy to verify truth as synthetic media advances. Responsible governance frameworks, consumer awareness, and tools to detect AI-generated misinformation will be critical. There are always those who will misuse technology, so proactive mitigation by platforms, governments and citizens is key to maintaining trust and truth in the AI age.

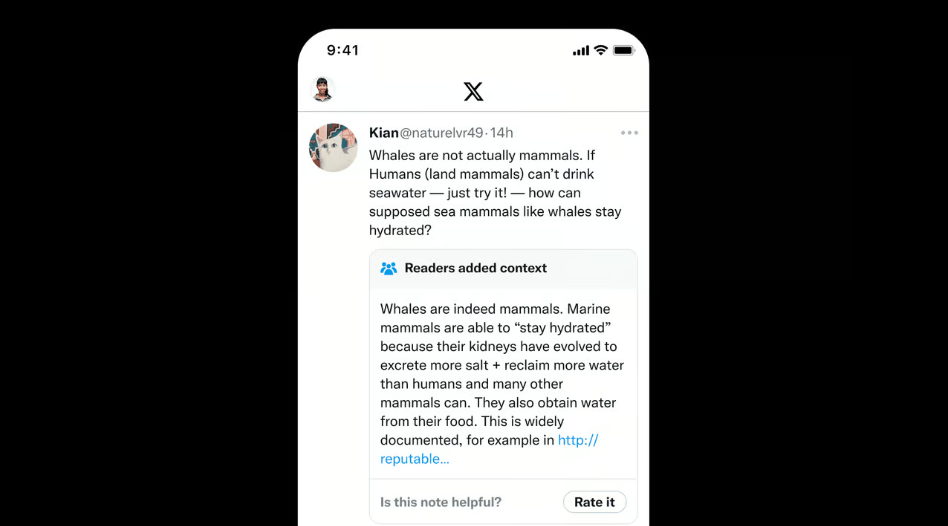

I personally like X Community Notes. This feature is a pilot program that allows users to add context to potentially misleading tweets. Contributors can leave notes on any tweet and if enough contributors from different points of view rate that note as helpful, the note will be publicly shown on the tweet.
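The "different points of view" requirement can be illustrated with a deliberately simplified rule. This is not X's actual algorithm, which is open source and infers viewpoints from rating history using matrix factorization; here each rater is assumed to carry a precomputed viewpoint label, and the function name and thresholds are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class Rating:
    rater_id: str
    viewpoint: str  # hypothetical precomputed cluster label, e.g. "left" / "right"
    helpful: bool

def should_show_note(ratings, min_helpful_per_view=2, min_helpful_share=0.7):
    """Simplified bridging check: the note must be rated helpful by enough
    raters, and by a large enough share of raters, in EVERY viewpoint group."""
    by_view = defaultdict(list)
    for r in ratings:
        by_view[r.viewpoint].append(r.helpful)
    if len(by_view) < 2:
        return False  # no cross-viewpoint agreement is possible yet
    for votes in by_view.values():
        helpful_count = sum(votes)
        if helpful_count < min_helpful_per_view:
            return False
        if helpful_count / len(votes) < min_helpful_share:
            return False
    return True

one_sided = [Rating(f"l{i}", "left", True) for i in range(10)]
bridging = (
    [Rating(f"l{i}", "left", True) for i in range(3)]
    + [Rating(f"r{i}", "right", True) for i in range(3)]
    + [Rating("r3", "right", False)]
)
print(should_show_note(one_sided))  # False: ten helpful votes, but one viewpoint
print(should_show_note(bridging))   # True: both viewpoints mostly agree
```

The design choice is that raw popularity is not enough: ten enthusiastic votes from one side lose to a handful of votes that cut across sides.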

Risk 6: The Future Prospect of AGI

One exciting possibility on the AI horizon is artificial general intelligence (AGI). AGI refers to AI systems that can perform any intellectual task a human can, across all domains. Essentially, it is AI that is as smart as humans in every way.

“OpenAI is an AI research and deployment company. Our mission is to ensure that artificial general intelligence benefits all of humanity.”

OpenAI's mission statement on its website

True AGI does not exist yet and is likely still decades away from being realized. While narrow AI has made tremendous progress in specialized skills like playing games, language processing, computer vision, etc., combining all these skills into one system with generalized capabilities remains an immense technical challenge.

However, many experts believe some form of AGI is inevitable if AI progress continues, perhaps emerging sometime in the middle of this century. The prospect of interacting with systems as smart as ourselves is profound. They could assist us enormously in making scientific discoveries, improving quality of life, and addressing global problems.

Here’s the AGI roadmap from OpenAI: https://openai.com/blog/planning-for-agi-and-beyond

Why AI Is Still the Solution

AI is an important piece of the solution to the real existential risks to humanity.

It will help solve problems such as:

- Healthcare: Identify diseases, personalize treatments

- Transportation: Self-driving vehicles, efficient traffic systems

- Communication: Seamless translation, voice assistants

- Innovation: New scientific discoveries, creativity

- Climate Change: Predict extreme weather, model carbon reduction strategies

The idea that AI poses an extinction risk to humanity is widely overhyped. AI develops gradually, and the “hard takeoff” scenario, in which AI suddenly achieves superintelligence overnight, is not realistic.

If we want humanity to survive and thrive, then rather than slowing AI down, we must keep AI progress moving as fast as possible. It is our responsibility to contain the risks while realizing the hopeful potential of AI. As a general-purpose technology, AI creates opportunities for everyone.

AI is the most powerful technology ever created.