AI has undergone a remarkable evolution, transforming from a speculative notion into a critical component of the modern technological landscape. Today’s newsletter covers the key phases in the history of AI:

- Expert Systems

- Machine Learning

- Deep Learning

- Foundation Models

Let’s Dive In! 🤿

AI, often surrounded by hype and exaggerated expectations, is in reality a field marked by gradual and rigorous advancements over many years. By understanding the actual progress and limitations of AI, we can appreciate its true impact and potential without succumbing to hyperbolic narratives.

Expert Systems: The Early Pioneers

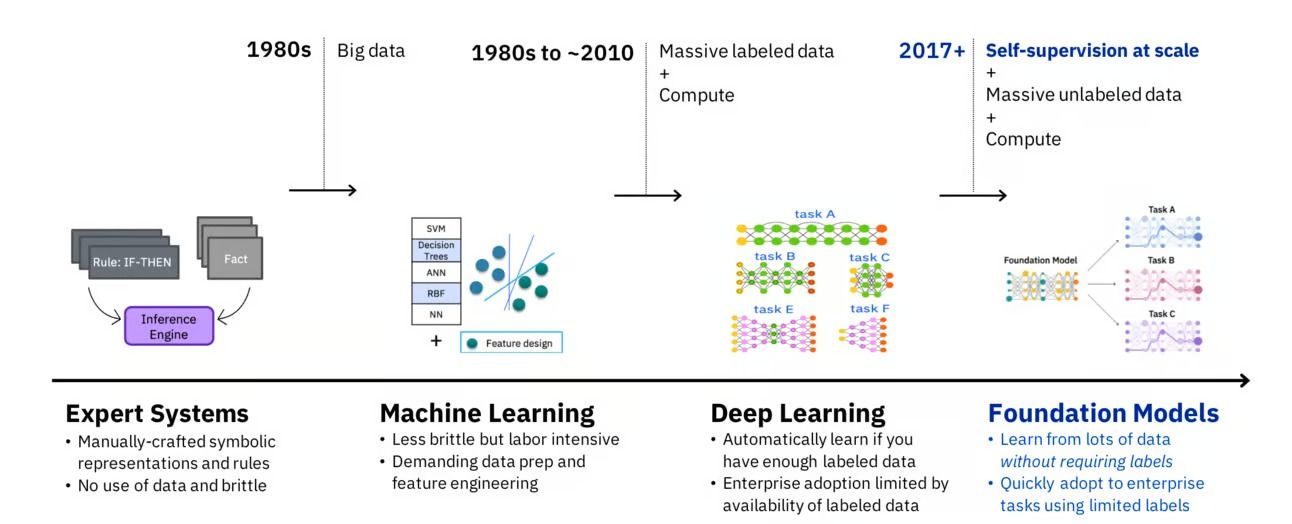

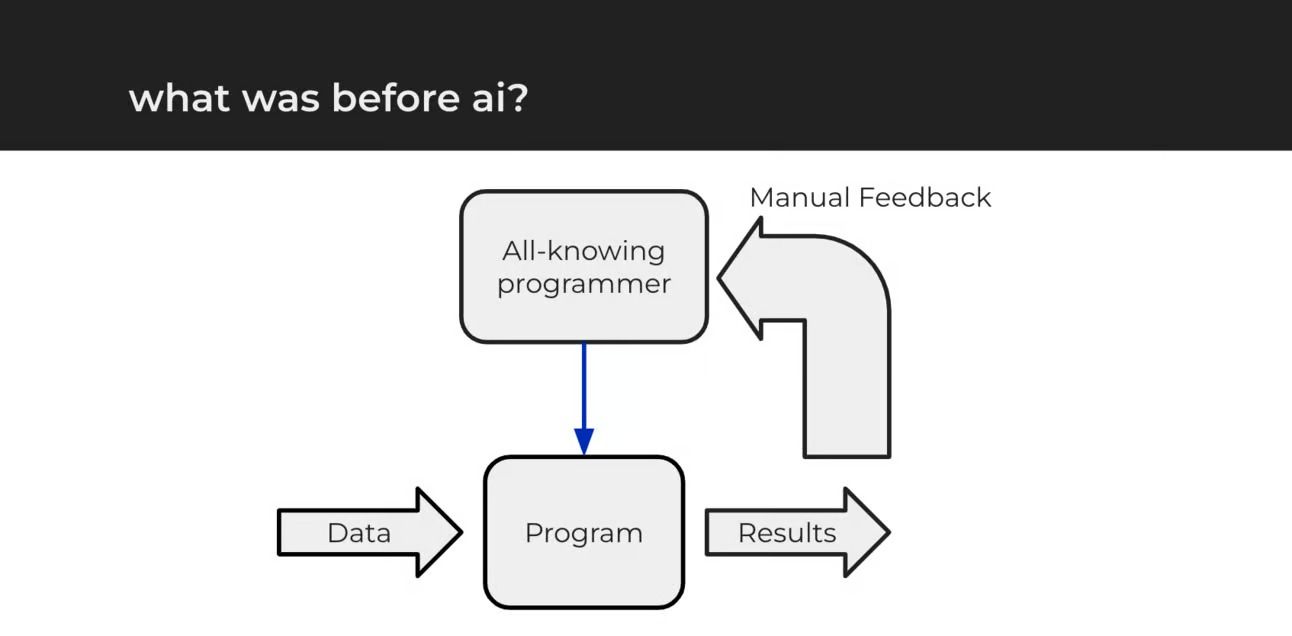

AI's first widely deployed applications can be traced back to the expert systems of the 1970s and 1980s. These systems were designed to mimic the decision-making abilities of a human expert. By encoding expert knowledge in the form of rules and heuristics, they could perform complex tasks in specific domains such as medical diagnosis, geological exploration, and legal reasoning.

Expert systems represented a significant breakthrough in AI. They could store and manipulate knowledge, making them valuable in industries where human expertise was scarce or expensive.

The main disadvantage of expert systems was their lack of flexibility. They relied on predefined rules and couldn't learn or adapt to new information, limiting them to specific domains and requiring extensive manual updates to stay relevant. This rigidity greatly restricted their effectiveness in dynamic or complex environments.
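
To make the rule-based idea concrete, here is a minimal sketch of a forward-chaining rule engine in Python. The rules and fact names are hypothetical toy examples, not taken from any real expert system, and real systems were far larger and more sophisticated.

```python
# Hypothetical toy rules: (conditions that must all hold, conclusion to add).
RULES = [
    ({"fever", "cough"}, "flu_suspected"),
    ({"flu_suspected", "shortness_of_breath"}, "refer_to_doctor"),
]


def infer(facts):
    """Forward chaining: keep firing rules until no new fact is derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in RULES:
            # A rule fires when all its conditions are already known.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


print(sorted(infer({"fever", "cough", "shortness_of_breath"})))
# A symptom no rule mentions yields nothing new: the system cannot adapt.
print(sorted(infer({"rash"})))
```

The second call shows the rigidity described above: any situation the rule authors did not anticipate simply passes through unchanged until someone writes a new rule by hand.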

Machine Learning: The Rise of Adaptive Intelligence

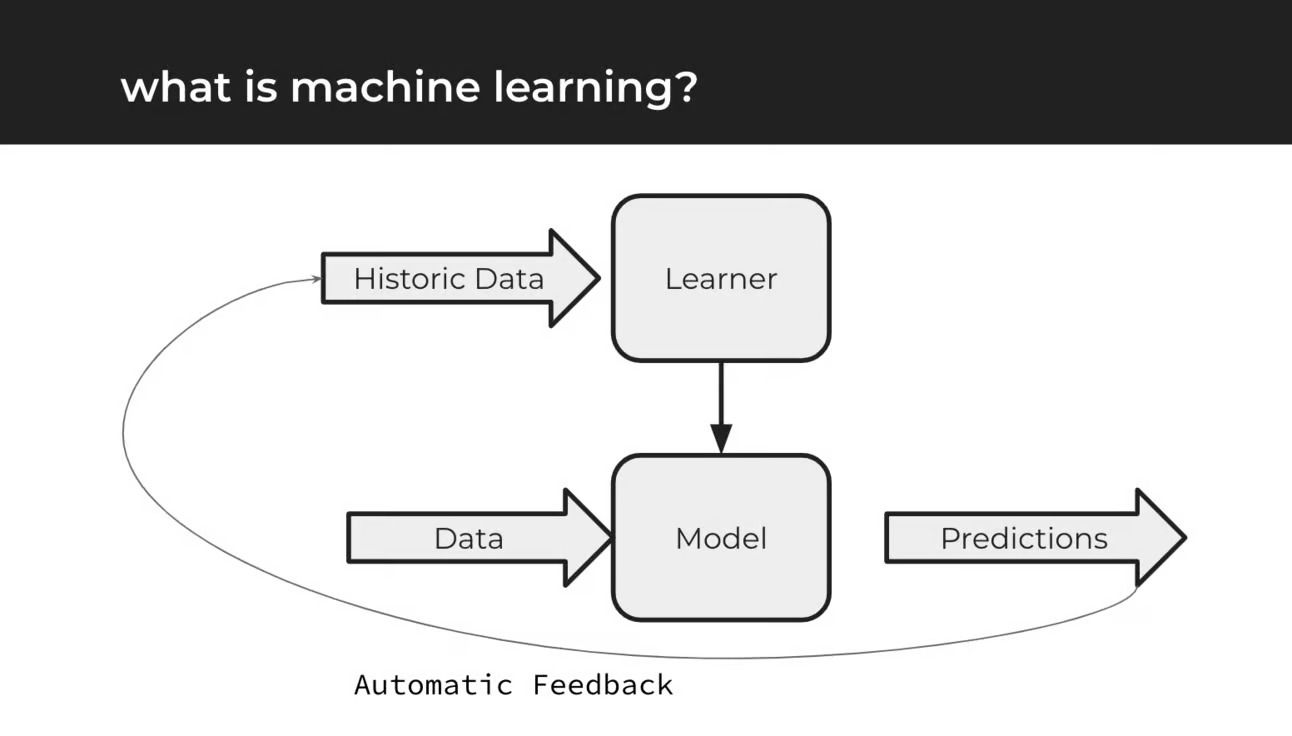

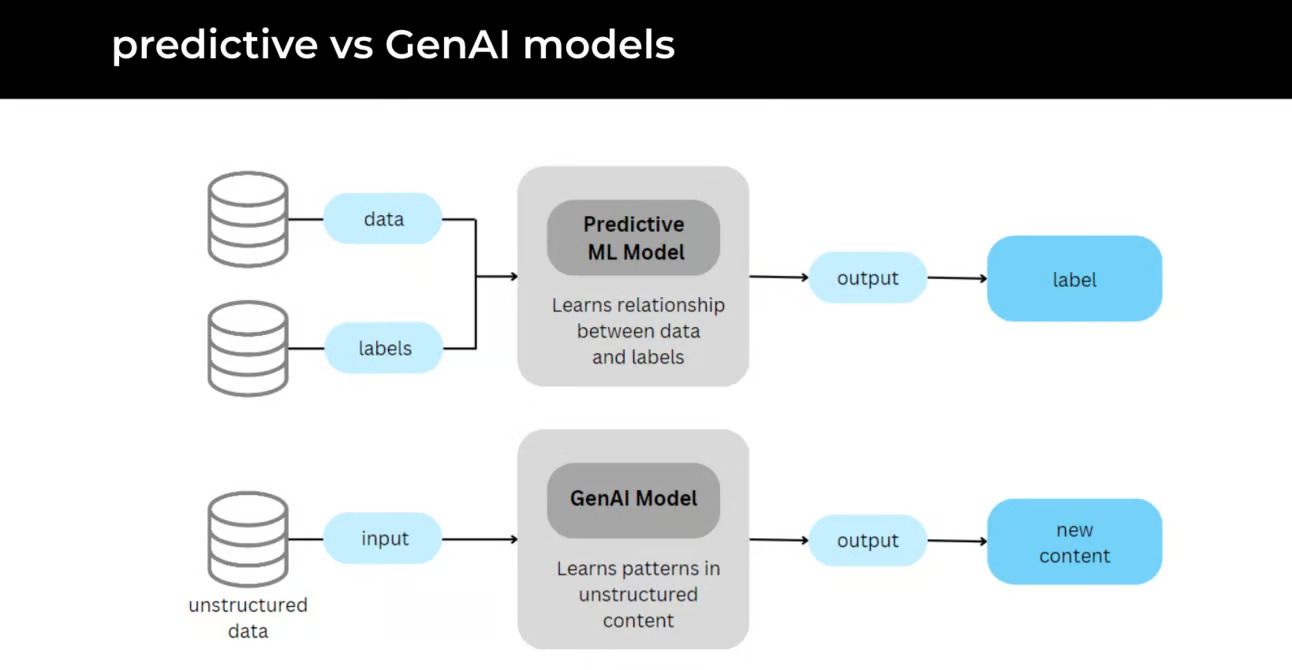

As AI research progressed, the focus shifted towards machine learning (ML) in the 1990s and 2000s. Unlike expert systems, ML algorithms could learn from data, improving their performance over time. This ability to learn and adapt opened up new possibilities for AI applications across various fields.

Machine learning encompasses a range of techniques, including decision trees, support vector machines, and neural networks. These methods enabled computers to make predictions or decisions without being explicitly programmed for specific tasks. Machine learning revolutionized sectors like finance, healthcare, and e-commerce by providing more dynamic and adaptable AI solutions.
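
As a rough illustration of learning from data, the sketch below fits a one-level decision tree (often called a decision stump) in plain Python. The transaction amounts and labels are made up for this example, and `fit_stump` and `predict` are hypothetical helper names. The point is that the threshold is chosen from the examples rather than written by hand.

```python
def fit_stump(xs, labels):
    """Pick the threshold on one feature that misclassifies the fewest examples."""
    best_threshold, best_errors = None, len(xs) + 1
    for t in sorted(set(xs)):
        # Predict True when x >= t, then count disagreements with the labels.
        errors = sum((x >= t) != y for x, y in zip(xs, labels))
        if errors < best_errors:
            best_threshold, best_errors = t, errors
    return best_threshold


def predict(threshold, x):
    return x >= threshold


# Made-up training data: transaction amount -> was it flagged as suspicious?
amounts = [12, 30, 45, 400, 520, 610]
flagged = [False, False, False, True, True, True]

threshold = fit_stump(amounts, flagged)
print(threshold)                 # 400
print(predict(threshold, 1000))  # True
print(predict(threshold, 20))    # False
```

Feeding the same function different training data yields a different threshold with no change to the code, which is the key contrast with a hardcoded rule.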

The main disadvantage of machine learning is its dependence on large, high-quality datasets. Insufficient or biased data can significantly degrade the accuracy of these models.

Deep Learning: A Leap Forward in Complexity and Capability

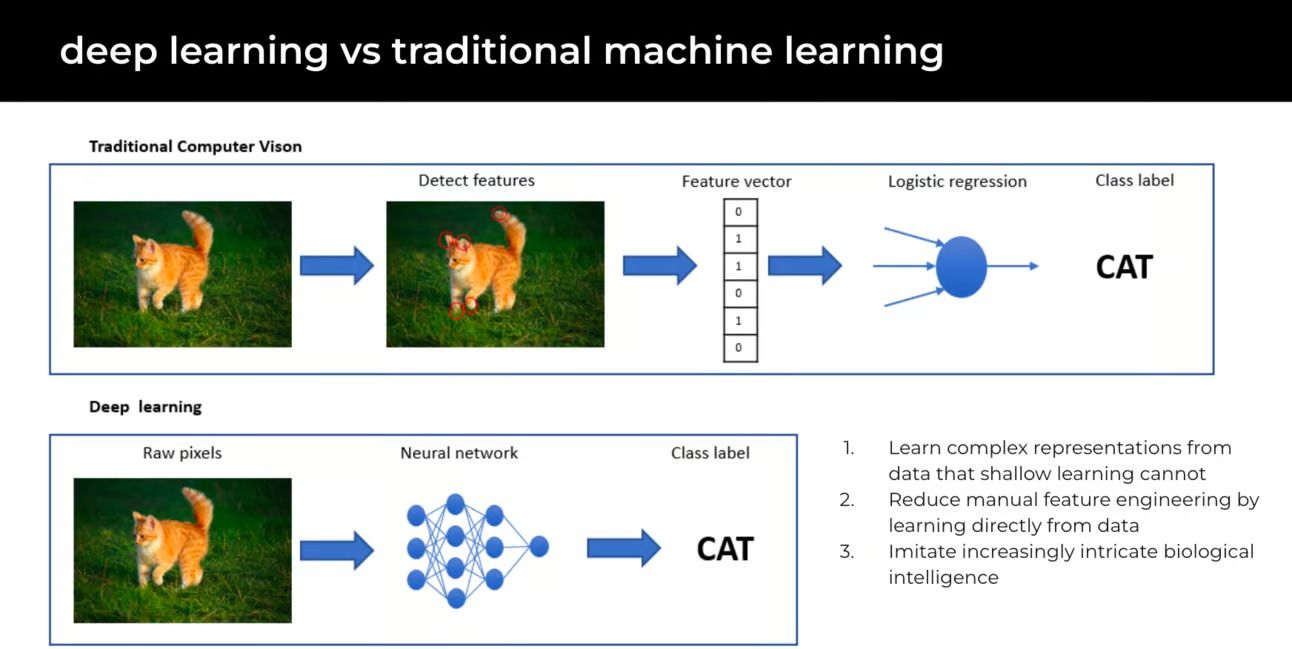

The advent of deep learning in the 2010s marked a significant leap in AI's capabilities. Deep learning, a subset of machine learning, involves neural networks with many layers (hence "deep") that can learn complex patterns in large datasets. These networks are loosely inspired by the structure and function of the human brain.
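
To show why stacking layers matters, here is a small sketch of a two-layer network in plain Python that computes XOR, a function that a single linear layer cannot represent. The weights are hand-picked for illustration, which is an assumption of this example. Real deep networks learn their weights from data and have far more layers and units.

```python
def relu(values):
    """Nonlinearity applied between layers: negative values become zero."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """One fully connected layer: weighted sum of the inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


# Hand-picked weights (illustrative assumption; normally learned in training).
HIDDEN_W, HIDDEN_B = [[1.0, 1.0], [1.0, 1.0]], [0.0, -1.0]
OUTPUT_W, OUTPUT_B = [[1.0, -2.0]], [0.0]


def xor_net(a, b):
    hidden = relu(dense([a, b], HIDDEN_W, HIDDEN_B))  # layer 1
    return dense(hidden, OUTPUT_W, OUTPUT_B)[0]       # layer 2


for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_net(a, b))
```

The hidden layer builds intermediate features that the output layer then combines. Deep learning repeats this idea across many layers.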

Deep learning has been instrumental in advancing fields such as natural language processing, computer vision, and speech recognition. Its impact is evident in the development of technologies like autonomous vehicles, sophisticated chatbots, and advanced image and voice recognition systems.

The main disadvantage of deep learning is its need for extensive computational resources and large datasets, making it resource-intensive and potentially less accessible for smaller organizations or projects.

Foundation Models: The Dawn of a New Era

The most recent breakthrough in AI history is the emergence of foundation models, which began gaining prominence in the early 2020s. These models, like GPT (Generative Pre-trained Transformer) and BERT (Bidirectional Encoder Representations from Transformers), both first released in 2018, are characterized by their large scale, extensive training on diverse datasets, and adaptability to a wide range of tasks.

Foundation models represent a paradigm shift in AI. They are not trained for specific tasks but rather provide a versatile base that can be fine-tuned for various applications. This approach has led to more efficient and effective AI systems, capable of performing tasks ranging from language translation to content creation with remarkable proficiency.

These models are characterized by several key features:

- Large-Scale Neural Networks: Foundation models utilize extremely large neural networks. These networks can have billions of parameters, making them capable of processing and understanding vast amounts of data.

- Extensive Training on Diverse Datasets: Unlike previous models trained on specific tasks or datasets, foundation models are trained on a wide range of data sources. This training approach enables them to learn a broad spectrum of patterns and representations.

- Adaptability to Multiple Tasks: One of the most remarkable aspects of foundation models is their versatility. The same underlying model can be fine-tuned for a variety of tasks, from language translation to image recognition, without significant modifications to the architecture.

- Dependency on Advanced Hardware: The training and deployment of these large-scale models require immense computational power. This has been made possible by the advancement of GPUs and cloud computing technologies, which provide the necessary processing capabilities.

- Influence on Various Domains: Foundation models are not just an academic curiosity; they have practical applications across diverse fields. They are transforming industries by enabling more accurate predictive models, sophisticated language understanding, and advanced automation.
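
To get a feel for the hardware demands mentioned in the list above, here is a back-of-the-envelope sketch that estimates the memory needed just to hold a model's weights. The 7-billion-parameter size is an illustrative assumption, not a figure for any specific model, and the estimate ignores everything else a running model needs, such as activations and training state.

```python
def weight_memory_gb(num_parameters, bytes_per_parameter):
    """Memory to store the weights alone, in gigabytes (1 GB = 10**9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9


# Hypothetical model with 7 billion parameters.
params = 7_000_000_000

print(weight_memory_gb(params, 4))  # 32-bit floats: 28.0
print(weight_memory_gb(params, 2))  # 16-bit floats: 14.0
```

Even this simplified arithmetic suggests why such models are usually run on dedicated accelerators or cloud infrastructure rather than ordinary laptops.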

The main disadvantage of foundation models is their requirement for enormous computational power and data, leading to high costs and potential accessibility issues for smaller organizations.

Conclusion

The history of AI is a story of constant innovation and advancement. From the rule-based expert systems to the versatile foundation models, AI has evolved to become an indispensable part of our digital world. As we look to the future, the potential of AI seems boundless, promising even more groundbreaking developments that will continue to reshape our interaction with technology.