This post is a bit different. We won't talk about no-code AI but precisely the opposite: hardcore, state-of-the-art AI.

Tesla held its second AI Day last Friday, September 30th, an event where the company showcases its AI innovations. The goal is to explain how the technology works and to attract talent to work for Tesla. The event was widely followed by the AI community, and it is clear that Tesla is one of the companies leading the way in applying machine learning to real-world problems. Its advances in autonomous driving are impressive. AI is no longer a futuristic concept; it is being used today in a variety of industries to streamline processes and improve efficiency, and Tesla is at the forefront. At AI Day, the company presented a number of AI initiatives it is working on.

In this post, I'll break down the key takeaways from Tesla AI Day.

Tesla AI Day was a great success. Some of the highlights included the new Tesla Optimus and advancements in autonomous driving. AI Day is a very technical event, where the speakers are the same engineers who build the AI behind Tesla's products. The topics discussed are advanced and complex. It is interesting to see how they create reusable frameworks in order to scale their technology, but at the core, this is all about coding!

1. The Tesla Optimus: Human-Form Robot

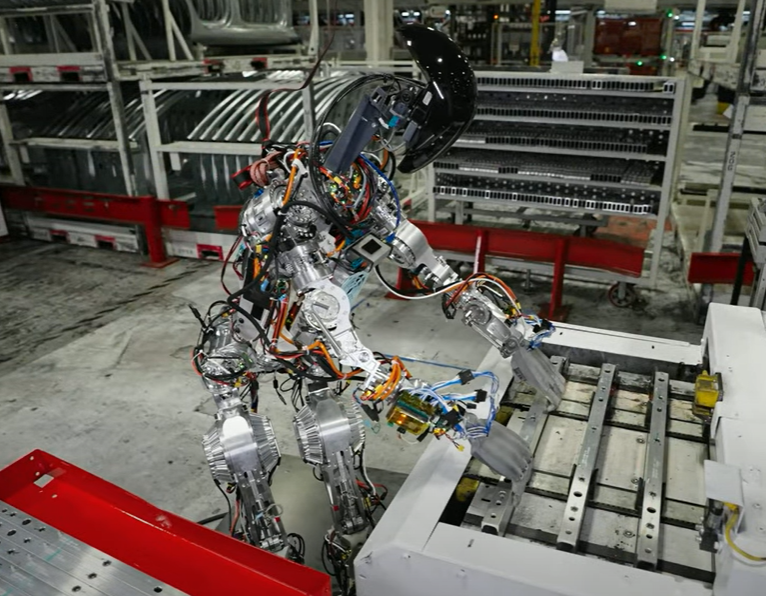

The event started strong, with the introduction of the Tesla Optimus. Optimus, also known as Tesla Bot, is a general-purpose humanoid robot. Its movements are inspired by human design and biology. The human form provides an incredible range of motion and can do a lot of useful work.


The AI running on it is the same technology that powers Tesla Autopilot, just re-trained for Optimus, and that should be a big competitive advantage in the long run. Tesla is leveraging everything it has already learned in hardware and software. A lot of work is still needed to refine and improve the robot, but it shows potential. There is a long and exciting road ahead to perfect it. The focus is on making the robot excel in core use cases, starting in a factory environment. The Optimus brain will become smarter over time.

It is true that Boston Dynamics robots look more impressive, but Elon said:

Optimus may not put on the best "demonstration", but will deliver with: a brain, inexpensively and high volume. Unlike a highly expensive demo with low-vol production.

Elon suggested that Optimus will remove the limits to economic growth. Tesla expects to be able to manufacture as many robots as needed, in volumes of millions, at a cost lower than a car.

If @Tesla's #Optimus replaces JUST factory jobs in the USA (none others): it could replace $500b of LABOR $ PER YEAR. $500b/$20k=would be 25 MILLION bots. At a 30% margin, @elonmusk could create $150 BILLION in gross profit for $TSLA (assuming 1yr of labor replaced) #AIDay2022

— Meet Kevin (@realMeetKevin) October 1, 2022
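The arithmetic in that tweet is easy to check. Here is a minimal sketch, assuming my reading of it is right: each bot sells for $20k and replaces $20k of labor in its first year. The variable names are mine, and the inputs are the tweet's assumptions, not Tesla figures.

```python
# Inputs quoted in the tweet (assumptions of its author, not Tesla data).
labor_replaced_per_year = 500e9   # USD of US factory labor per year
price_per_bot = 20_000            # USD, assumed price of one Optimus
gross_margin = 0.30               # assumed gross margin on each bot

# One bot is assumed to replace its own price in labor within a year.
bots_needed = labor_replaced_per_year / price_per_bot
revenue = bots_needed * price_per_bot
gross_profit = revenue * gross_margin

print(f"bots needed:  {bots_needed:,.0f}")
print(f"gross profit: ${gross_profit / 1e9:,.0f} billion")
```

The numbers match the tweet: 25 million bots and $150 billion in gross profit. Everything hinges on the $20k price and the 30% margin, so treat it as a back-of-the-envelope estimate.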

From Concept to Scale Manufacturing

Their goal is to reduce the part count and power consumption of every element possible.

Porting the AI from Cars to Optimus

Tesla sees this as moving from a robot on wheels to a robot on legs. Many components are pretty similar and others required a full re-design. For example, the computer vision system is ported directly from Autopilot; the only thing that changed is the training data. This shows how portable AI technologies are across similar use cases.

Indoor navigation is something they are working hard on, and it is very different from navigation in cars. To make the robot walk they need coordinated motion, balance, physical self-awareness, and an understanding of the environment. Navigation is different because there is no GPS and the environment is very different from the streets.

2. Full Self Driving

With Full Self-Driving (FSD) capability, you will get access to a suite of more advanced driver assistance features, designed to provide more active guidance and assisted driving under your active supervision. It still requires active driver supervision and does not make the vehicle autonomous.

Every Tesla car built over the last several years has all the hardware components for full self-driving. Tesla has been working on the software to provide higher and higher levels of autonomy. In 2021, roughly 2,000 customers had the Full Self-Driving Beta software. Now, in 2022, there are 160,000. To get there, the team delivered:

- 35 releases

- 281 Models shipped

- 18,659 Pull Requests

- 75,778 Models Trained

- 4.8M Clip Dataset

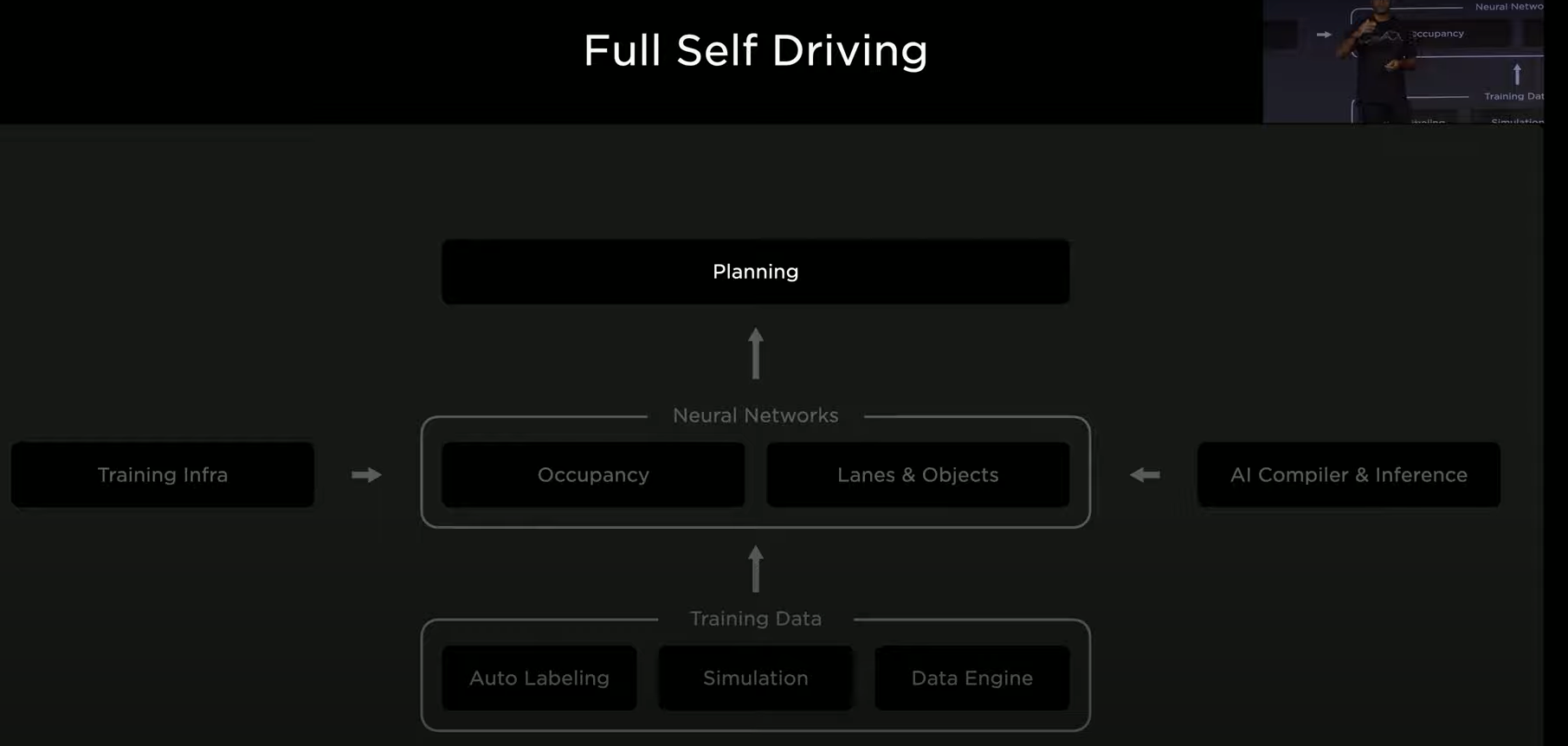

Everything starts with the camera streams, which run through the neural networks, and all of it runs in the car. It is the car itself that produces all the outputs for self-driving. The planning software makes sense of the outputs of the neural networks and drives the car.

The engineering leads presented progress in all the areas of the FSD stack at Tesla.

2.1 FSD - Planning

The demonstration showed how Tesla Autopilot deals with an intersection where a pedestrian is crossing unexpectedly without a crosswalk. Humans are good at looking at a scene, understanding all the object interactions, evaluating the most promising ones, and generally making good decisions. In this case, there are several approaches that the car could take, and evaluating all those interactions is not trivial.

The lead engineer explained how Interaction Search analyzes lanes, occupancy, and moving objects and runs an optimization model that takes multiple constraints into account. They moved from Physics-Based Numerical Optimization to a Neural Planner, a set of lightweight queryable networks trained on human demonstrations from the fleet and on offline solvers with relaxed time limits. This allows the planner to evaluate an action in about 100 µs, in real time. With traditional optimization the cost was roughly 1 to 5 ms per action, which is not fast enough for a self-driving use case.

The trajectory generated by the planner then goes through trajectory scoring, which covers things like Collision Check, Comfort Analysis, Intervention Likelihood, and Human-Like Discrimination. That score helps decide which trajectory the car should take. All this happens in real time: thousands of calculations based on optimization, models trained on millions of real-life situations, and computer-generated simulations.
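To make the scoring step more concrete, here is a minimal sketch of how candidate trajectories could be ranked. It is not Tesla's implementation: the `Trajectory` fields mirror the four checks named above, but the weights, the hard collision limit, and the example values are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Trajectory:
    name: str
    collision_risk: float           # 0 (safe) .. 1 (certain collision)
    discomfort: float               # 0 (smooth) .. 1 (harsh)
    intervention_likelihood: float  # 0 .. 1, chance the driver takes over
    human_likeness: float           # 0 .. 1, how human-like the maneuver looks

# Hypothetical weights and limit, chosen only for illustration.
WEIGHTS = {"collision": 10.0, "comfort": 1.0, "intervention": 3.0, "human": 2.0}
COLLISION_LIMIT = 0.5

def score(t: Trajectory) -> float:
    """Weighted cost of a trajectory; lower is better."""
    return (WEIGHTS["collision"] * t.collision_risk
            + WEIGHTS["comfort"] * t.discomfort
            + WEIGHTS["intervention"] * t.intervention_likelihood
            + WEIGHTS["human"] * (1.0 - t.human_likeness))

def pick_best(candidates):
    """Drop candidates that fail the collision check, then take the cheapest."""
    safe = [t for t in candidates if t.collision_risk < COLLISION_LIMIT]
    if not safe:
        raise ValueError("no candidate passes the collision check")
    return min(safe, key=score)

candidates = [
    Trajectory("assert ahead of pedestrian", 0.60, 0.3, 0.7, 0.4),
    Trajectory("yield to pedestrian", 0.05, 0.2, 0.1, 0.9),
    Trajectory("hard brake", 0.02, 0.9, 0.4, 0.3),
]
best = pick_best(candidates)
print(best.name)
```

With these made-up numbers the planner would yield to the pedestrian: braking hard is safe but uncomfortable, and asserting ahead fails the collision check outright.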

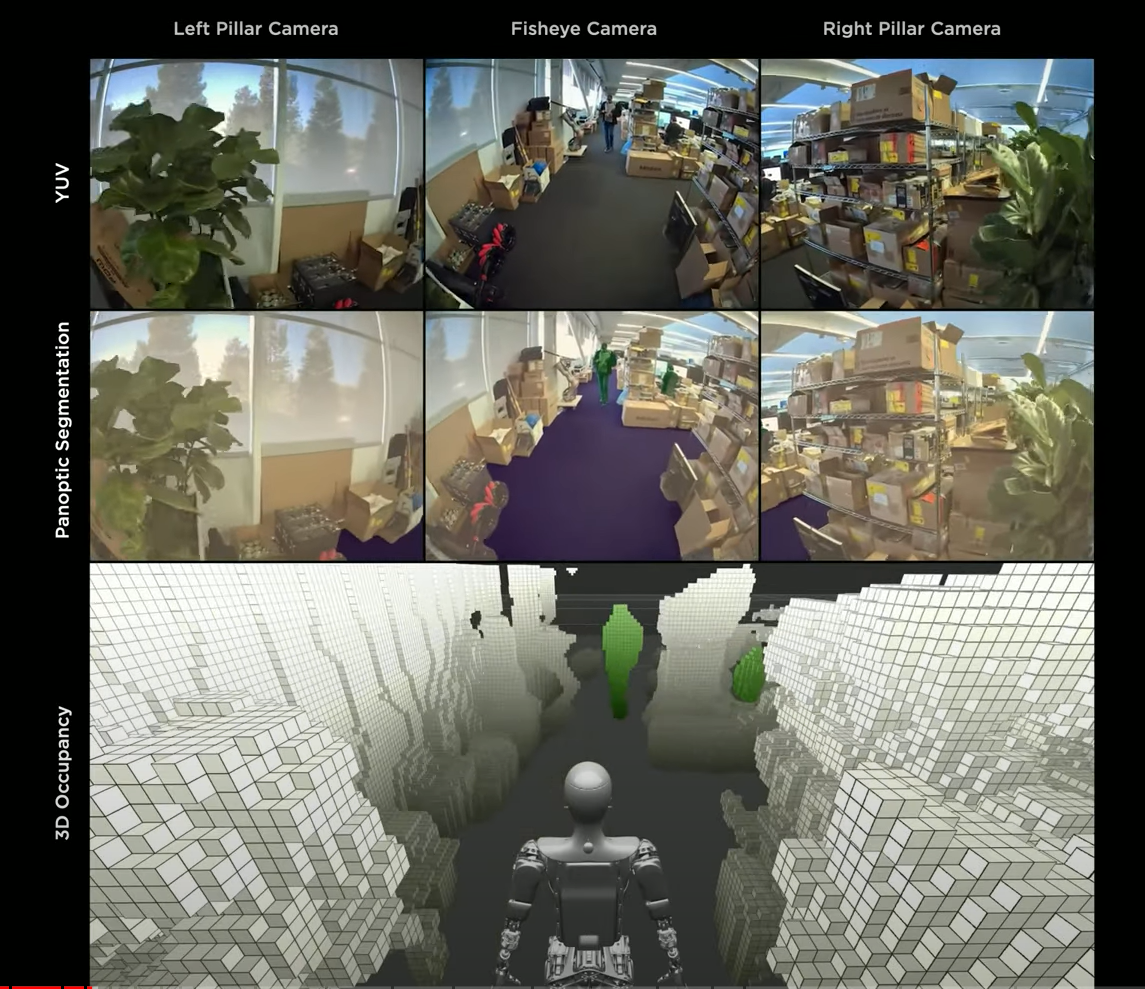

2.2 FSD - Occupancy Network

The Occupancy Network is Tesla's solution for modeling the physical world. Using the 8 video cameras on the car, it builds a 3D representation of the car's surroundings. The Tesla team calls this architecture the future of computer vision, given how advanced the system has become. They announced that they are moving away from ultrasonic sensors to bet fully on computer vision combined with neural networks. The Occupancy Network takes the camera video streams and produces volumetric occupancy, so it understands the environment instantaneously. It also understands the flow of objects and predicts their future motion.

Today, we are taking the next step in Tesla Vision by removing ultrasonic sensors (USS) from Model 3 and Model Y. We will continue this rollout with Model 3 and Model Y, globally, over the next few months, followed by Model S and Model X in 2023.

This system is very efficient: it runs entirely in the car, is optimized for memory and compute, and runs in real time, in 10 ms.

Along with the removal of USS, we have simultaneously launched our vision-based occupancy network – currently used in Full Self-Driving (FSD) Beta – to replace the inputs generated by USS. With today’s software, this approach gives Autopilot high-definition spatial positioning, longer range visibility and ability to identify and differentiate between objects. As with many Tesla features, our occupancy network will continue to improve rapidly over time.

The occupancy network is trained with a large Auto-Labeled Dataset, without any human in the loop.
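If "volumetric occupancy" sounds abstract, think of the space around the car as a grid of small cubes (voxels), each marked as occupied or free. The toy sketch below only illustrates that output format. A real occupancy network predicts the grid from camera video, whereas here the 3D points are simply given, and the voxel size and function names are my own assumptions.

```python
# Toy volumetric occupancy grid. The 3D points are handed in directly;
# in the real system a neural network infers occupancy from video.
VOXEL_SIZE = 0.5  # metres per voxel edge (hypothetical value)

def voxel_index(point):
    """Map an (x, y, z) position in metres to integer voxel coordinates."""
    x, y, z = point
    return (int(x // VOXEL_SIZE), int(y // VOXEL_SIZE), int(z // VOXEL_SIZE))

def build_occupancy(points):
    """Return the set of voxels that contain at least one point."""
    return {voxel_index(p) for p in points}

def is_occupied(grid, point):
    """Check whether the voxel containing `point` is marked occupied."""
    return voxel_index(point) in grid

points = [(2.1, 0.2, 0.3), (2.3, 0.4, 0.1), (5.0, -1.2, 0.8)]
grid = build_occupancy(points)

print(len(grid))                           # the first two points share a voxel
print(is_occupied(grid, (2.4, 0.1, 0.4)))  # same voxel as the first point
print(is_occupied(grid, (0.0, 0.0, 0.0)))  # nothing was observed here
```

A planner can then query the grid for any position without caring what kind of object is there, which is the appeal of an occupancy representation.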

2.3 FSD - Lanes and Objects

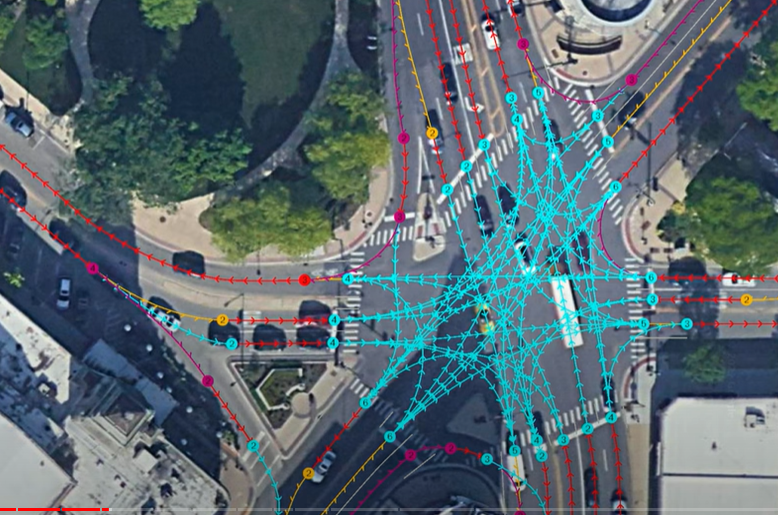

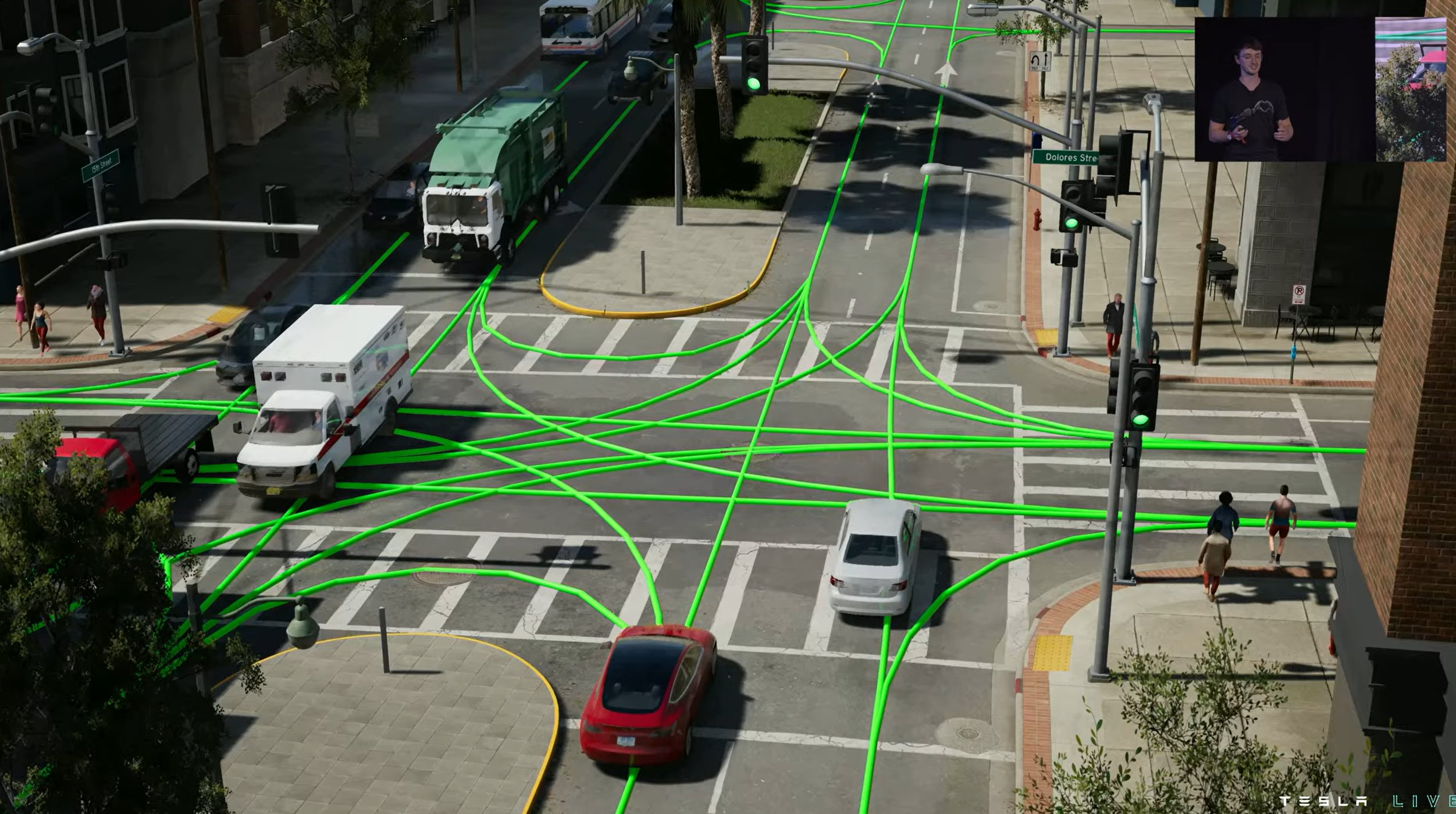

How do they predict lanes? With a Lane Detection Neural Network. In the early days of Autopilot, they modeled lane detection as an image-based instance segmentation task. That model works for highly structured roads such as highways, but today Tesla is trying to build a system that can handle much more complex maneuvers. Specifically, they want to be able to make left and right turns at intersections, where the road topology can be more complex and diverse. With such simplistic models, the system totally broke down.

What they try to do is produce the full set of instances and their connectivity to each other, all with a Neural Network capable of producing a graph like in the image below.

The Lane Detection Neural Network is made of three components. The first is a set of layers that encode the video streams from the 8 video cameras on the vehicle and produce a rich visual representation. Second, they enhance that visual representation with map data. These two components create a Dense World Tensor that encodes the world, but what they need is to convert that into a set of lanes and connectivities. To solve that they created an Autoregressive Decoder, a new Language Component developed at Tesla, called "The Language of Lanes". They modeled the task as a language problem, applying state-of-the-art solutions for language.

The result? A full map of the lanes and their connectivity comes straight from the neural network.
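The key idea of an autoregressive decoder is that it emits one token at a time and feeds everything generated so far back in as input. Here is a toy sketch of that loop. The token format (pairs of lane names) and `toy_next_token` are invented for illustration: the real decoder is a trained network that reads the Dense World Tensor, while my stand-in just reads a list of known edges.

```python
def toy_next_token(world_edges, prefix):
    """Stand-in for the trained decoder (hypothetical): returns the next
    token given the encoded world and the tokens generated so far."""
    flat = [tok for src, dst in world_edges for tok in (src, dst)]
    return flat[len(prefix)] if len(prefix) < len(flat) else "<end>"

def decode(world_edges, max_tokens=32):
    """Generic autoregressive loop: generate, append, repeat until <end>."""
    tokens = []
    while len(tokens) < max_tokens:
        tok = toy_next_token(world_edges, tokens)
        if tok == "<end>":
            break
        tokens.append(tok)
    return tokens

def tokens_to_graph(tokens):
    """Read the token stream as (source, destination) pairs of lanes."""
    graph = {}
    for src, dst in zip(tokens[0::2], tokens[1::2]):
        graph.setdefault(src, []).append(dst)
    return graph

# A fork (lane_A splits into B and C) followed by a continuation.
world = [("lane_A", "lane_B"), ("lane_A", "lane_C"), ("lane_B", "lane_D")]
tokens = decode(world)
lane_graph = tokens_to_graph(tokens)
print(lane_graph)
```

The output is a small graph in which `lane_A` connects to both `lane_B` and `lane_C`, which is the kind of fork that a per-pixel segmentation mask struggles to express.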

This "Language of Lanes" can also be applied to Optimus and its walking paths. You could ask Optimus to go somewhere, and it will find different pathways and figure out how to get there. It is an extensible and highly customizable framework for navigation and the Planner.

2.4 FSD - Auto Labeling

As you can see, it is all about neural networks, and if we know one thing about neural networks, it is that they need a lot of training data. Tesla can collect data from more than 500k trips a day. However, turning all that data into a form suitable for training is a very challenging technical problem. To solve it, Tesla's engineers tried different combinations of manual labeling and auto labeling. They use different auto-labeling frameworks for different use cases. During the presentation, they focused on the lanes use case.

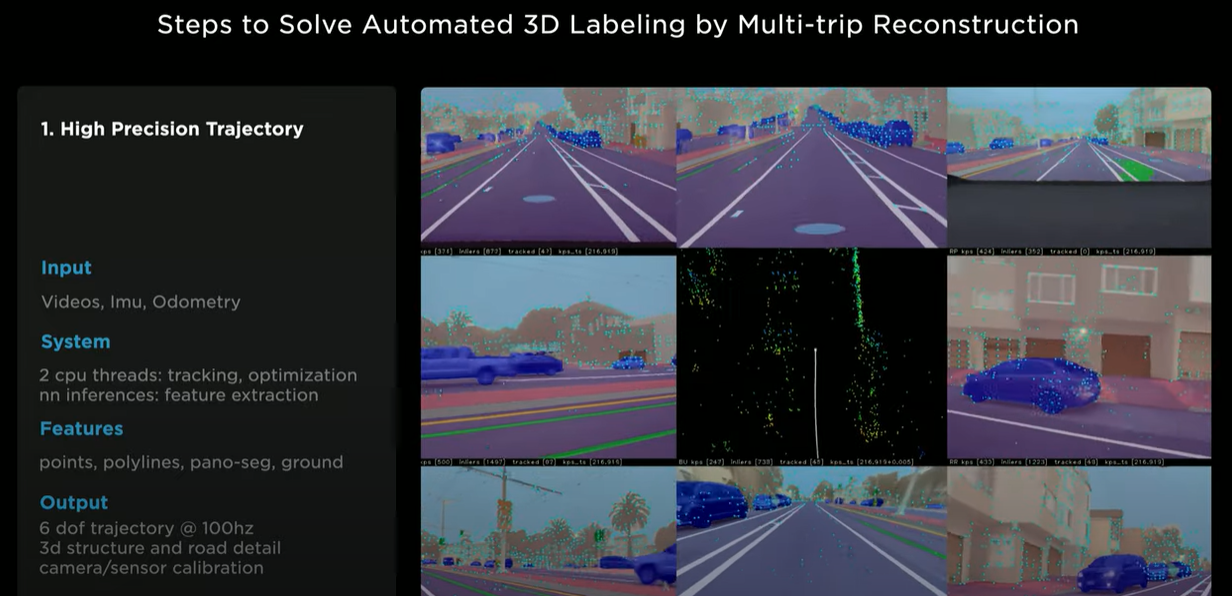

After years of work, they came up with an Auto Labeling Machine that provides a high-quality, diverse, and scalable solution. The result: for 10k trips, 5 million hours of manual labeling can now be replaced by 12 hours on the cluster. How did they do it? With three big steps:

- High Precision Trajectory and Structure Recovery

- Multi-Trip Reconstruction

- Auto-Labeling New Trips

2.5 FSD - Simulation

Simulation plays an important role when Tesla engineers need data that is difficult to source or too hard to label. However, 3D scenes are slow to produce. For example, they showed a complex intersection scene of Market St in San Francisco that took 3D artists 2 weeks to complete. That is too slow. During the presentation, Tesla engineers presented new tooling called the Simulation World Creator, which generates scenes on demand in less than 5 minutes. That's 1,000 times faster than before.
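The 1,000x figure lines up if the 2 weeks are counted as working hours. Here is a quick check under that assumption (a 40-hour work week is my guess; the talk did not say how the time was counted):

```python
# 2 weeks of artist time vs. 5 minutes of tooling time.
HOURS_PER_WORK_WEEK = 40  # assumption, not stated in the talk

artist_minutes_working = 2 * HOURS_PER_WORK_WEEK * 60  # 4,800 minutes
artist_minutes_calendar = 2 * 7 * 24 * 60              # 20,160 minutes
tool_minutes = 5

speedup_working = artist_minutes_working / tool_minutes
speedup_calendar = artist_minutes_calendar / tool_minutes

print(f"working time:  {speedup_working:,.0f}x faster")
print(f"calendar time: {speedup_calendar:,.0f}x faster")
```

Counting working hours gives 960x, close to the quoted 1,000x. Counting calendar time gives about 4,000x, so either way the claim holds as an order of magnitude.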

To do that, they feed the ground truth labels into the Simulation World Creator tooling. For example, they can convert the lane labels into road topology and geometry, and then pave and paint the roads. Island geometry can be populated with randomized items. Map data can be combined in to add things such as traffic lights or stop signs, and even accurate street names and signs. Lane graphs provide the lane connectivity. Finally, they can add randomized traffic permutations inside the simulator. It all runs on Unreal Engine.

They basically created Simulation-as-a-Service. It is all automatic, with no artist in the loop, and it happens within minutes. With this, they can create any variation they want, from changing object placement or adding rain to drastic changes such as the location of the environment. They can create infinite targeted permutations to train the system and create data for new situations that may not have been collected or labeled before.
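Generating "targeted permutations" boils down to combining a few scene parameters and randomizing the rest. Below is a minimal sketch of that idea. The parameter names and values are hypothetical (the talk only gave object placement, weather, and location as examples), and a real pipeline would render each variation instead of returning a dictionary.

```python
import itertools
import random

# Hypothetical scene parameters, for illustration only.
WEATHER = ["clear", "rain", "fog"]
LOCATION = ["urban", "suburban", "rural"]
TRAFFIC = ["light", "heavy"]
PROPS = ["cone", "trash can", "parked car", "bench"]

def scenario_variations(seed=0, objects_per_scene=3):
    """Yield one scene description per weather/location/traffic combination,
    each with a randomized (but reproducible) set of roadside objects."""
    rng = random.Random(seed)
    for weather, location, traffic in itertools.product(WEATHER, LOCATION, TRAFFIC):
        yield {
            "weather": weather,
            "location": location,
            "traffic": traffic,
            "objects": rng.sample(PROPS, objects_per_scene),
        }

scenarios = list(scenario_variations())
print(len(scenarios))  # 3 weather x 3 location x 2 traffic = 18
print(scenarios[0])
```

Adding one more parameter multiplies the number of scenes, which is why automating this step matters so much more than speeding up a single hand-built scene.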

2.6 FSD - Training Infrastructure

The training required for FSD is based on video, and video is more complicated than text or images. That's why Tesla had to go end-to-end, from the storage layer all the way to the GPU, to design a system optimized for large scale. The data that comes from the fleet goes straight into training and is not pre-processed at all. Their Optimized Video Model Training is an extension to PyTorch.

It takes 1.4 billion frames to train the Occupancy Network. That would take 100,000 hours on a single GPU. Of course, that is not an option, so they have to parallelize the process. Tesla's training infrastructure relies on supercomputers. In fact, Tesla built 3 supercomputers in-house with a total of 14k GPUs: 4k for auto labeling and 10k for training.
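Those numbers explain why parallelism is mandatory. Here is a rough sketch of the arithmetic, assuming a single job could use all 10k training GPUs with perfect linear scaling (the talk did not claim that; the `efficiency` parameter is my way of relaxing it):

```python
single_gpu_hours = 100_000  # time to train on one GPU, from the talk
training_gpus = 10_000      # GPUs reserved for training, from the talk
frames = 1.4e9              # frames needed to train the Occupancy Network

def wall_clock_hours(gpus, efficiency=1.0):
    """Ideal training time; efficiency < 1 models imperfect scaling."""
    return single_gpu_hours / (gpus * efficiency)

years_on_one_gpu = single_gpu_hours / (24 * 365)
frames_per_gpu_second = frames / (single_gpu_hours * 3600)

print(f"one GPU:          {years_on_one_gpu:.1f} years")
print(f"10k GPUs (ideal): {wall_clock_hours(training_gpus):.0f} hours")
print(f"10k GPUs (50%):   {wall_clock_hours(training_gpus, 0.5):.0f} hours")
print(f"throughput:       {frames_per_gpu_second:.1f} frames per GPU-second")
```

More than 11 years on one GPU becomes hours on the cluster, even if scaling is far from perfect.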

All the videos take 30 petabytes of distributed, managed video cache. Don't think of these datasets as fixed, like the classic ImageNet. It is a very fluid thing: 500k videos rotate in and out of the cluster every day, and they track 400k video instantiations every second. That's a lot of calls. They created a new file format called .smol to handle video and ground truth. It is 11% smaller and needs 4x fewer IOPS.

You cannot just buy 14k GPUs and 30 PB of flash storage and throw them together; it takes a lot of work to make all those pieces fit. They made a huge, continuous effort to optimize the compute, and they are now able to re-train models such as the Occupancy Network 2.3x faster. Now they can scale the system and train in hours instead of days or weeks.

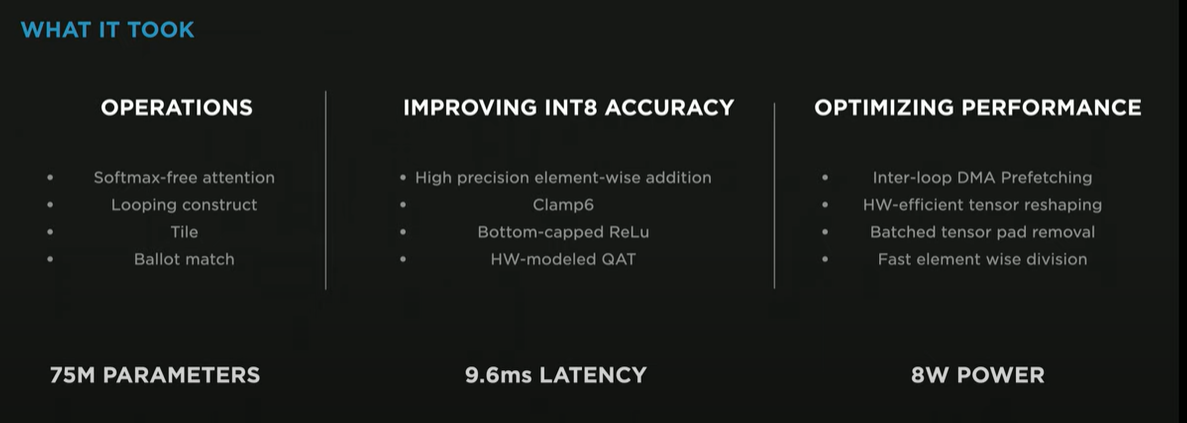

2.7 FSD - AI Compiler & Inference

Every millisecond really matters when reacting on the road. Tesla engineers presented how they optimize neural networks to minimize latency. The example shown was the FSD Lanes Network in the car. All those networks run directly on the TRIP engine, the neural network accelerator in the car. Within the car, there are several networks, such as the Moving Object Network, Occupancy Network, Traffic Controls & Road Sign Network, and Path Planning Network. To give a sense of scale, together they add up to 1B parameters and produce 1k neural network signals.

Tesla built a compiler just for Neural Networks to run in a parallel fashion, allowing them to optimize all the layers of software.

2.8 FSD - Dojo Super Computing Platform

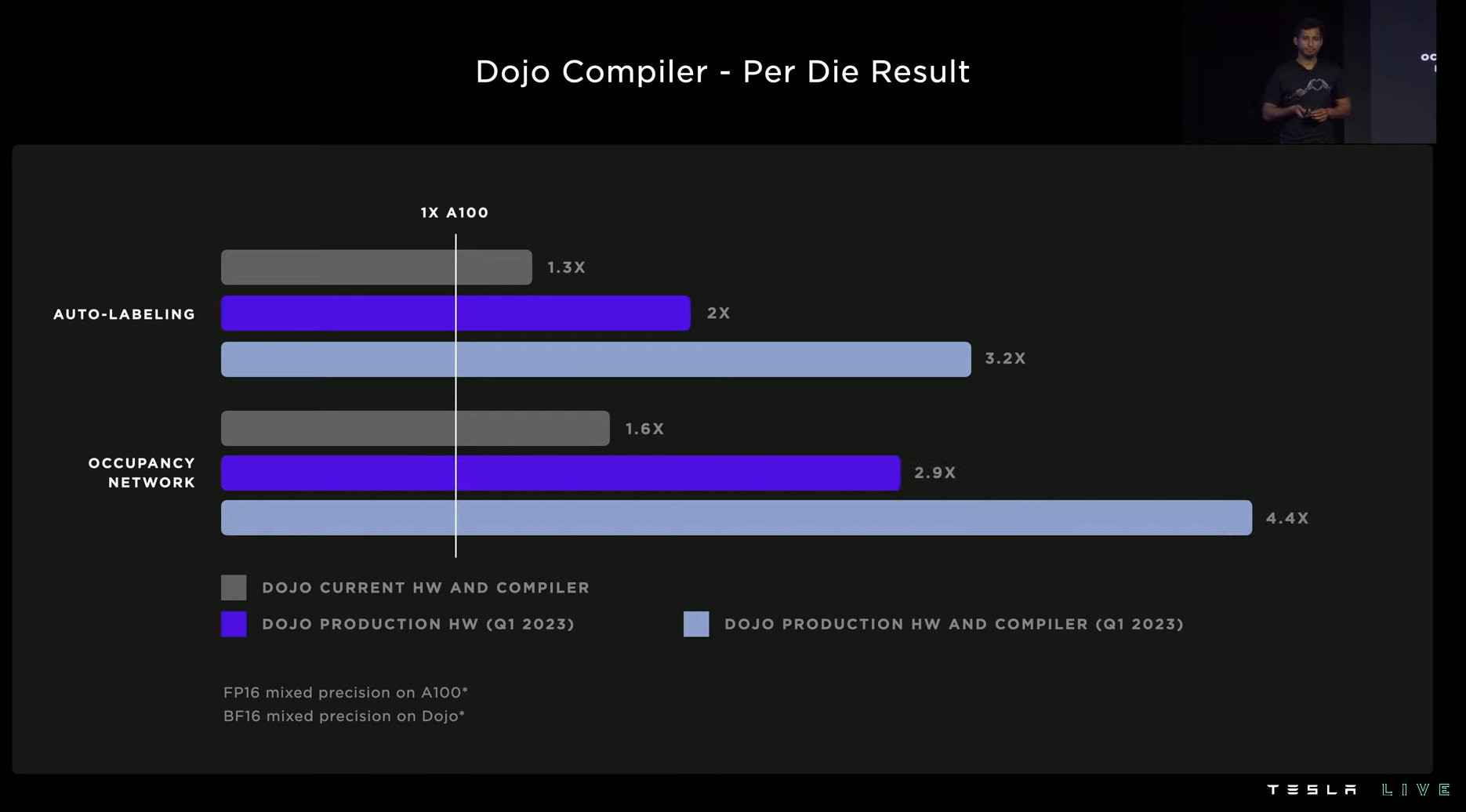

Why is a car company building a supercomputer for training neural networks? The initial motivation was to reduce the time it takes to train models. Some of the large neural networks took over a month to train, which slowed down the team's ability to rapidly explore and evaluate alternatives. The goal was a cost-competitive and energy-competitive way to train them.

They claim they have a machine that is different from anything that's available today. It can scale with no limits so it can meet the needs of the Autopilot team.

The vision for Dojo is to build a single, unified, scalable compute plane. The software sees a single computer with uniform access to fast memory, at high bandwidth and low latency. Dojo delivers somewhere between 2x and 3x the performance of a GPU. A Dojo tile is 1/5th the cost of a GPU box. Neural networks that used to take a month to train can now be trained in a week.

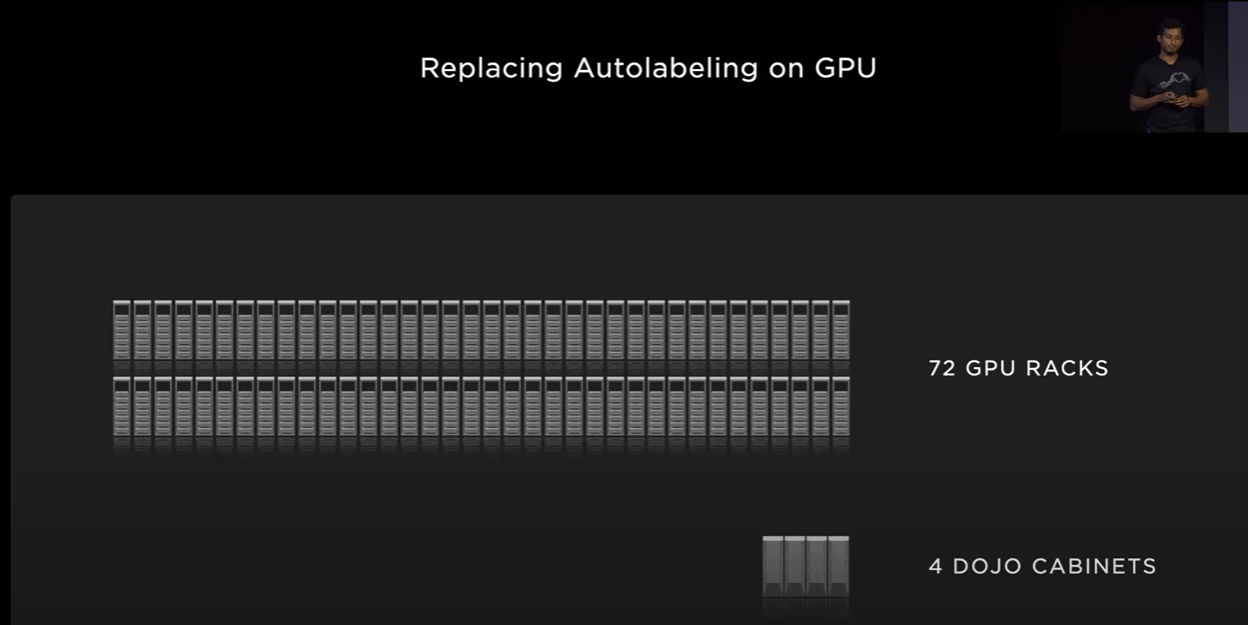

Tesla expects to replace 72 GPU racks, containing over 4k GPUs, with just 4 Dojo cabinets that provide the same throughput and performance. Those will be available in Q1 2023 and will be part of the first Exapod. This will more than double Tesla's auto-labeling capacity. This first Exapod is part of a set of 7 that will be built in Palo Alto.

Final Conclusion

Tesla at its heart is a hardcore technology company, not just a car company. All across the company, people are working hard on science and engineering to advance the fundamental understanding of methods to build cars, energy solutions, robots, and more to improve the human condition around the world.

Tesla has a fail-fast philosophy that allows it to push its boundaries. It is mind-blowing to see the pace of innovation, and very exciting to see how far AI has come with custom hardware and software. The event closed with 1 hour of Q&A, where Elon Musk and the engineers took hard questions about their technical implementations.

Feel free to watch the full 3+ hour event below.