Artificial Intelligence (AI) is rapidly transforming the field of medicine, particularly in diagnostics and medical imaging. Medical imaging is crucial in the detection, diagnosis, and treatment of many illnesses. AI algorithms can analyze medical images, detect patterns, and surface findings that may be difficult for the human eye to identify. In this blog post, we will discuss the impact of AI on diagnostics and medical imaging and its potential to revolutionize healthcare.

📷 AI in Medical Imaging

Medical imaging is a vital tool in the diagnosis and treatment of disease, and it has advanced steadily over the years. However, interpreting medical images is a complex task that requires specialized knowledge and experience. AI algorithms can analyze large volumes of images and identify patterns that radiologists may find hard to detect. Used well, AI can improve diagnostic accuracy, speed up interpretation, and reduce errors.

For instance, AI algorithms can analyze X-rays, CT scans, and MRIs to identify early signs of diseases such as cancer, Alzheimer's disease, and cardiovascular disease. In some cases, AI algorithms can detect abnormalities that a radiologist might have missed. AI can also help quantify what an image shows, for example by measuring the size of a tumor, detecting changes in brain structure, or evaluating blood flow patterns.
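
Quantification typically starts from a segmentation mask. The sketch below, a minimal illustration rather than a clinical tool, converts a binary 3D mask into a volume in millilitres. It assumes the mask and the voxel spacing (read, for example, from the scan header) are already available; the function name `lesion_volume_ml` is hypothetical.

```python
import numpy as np


def lesion_volume_ml(mask: np.ndarray, spacing_mm: tuple) -> float:
    """Return the volume of the foreground (True) voxels in millilitres.

    mask: 3D boolean array, e.g. a tumor segmentation.
    spacing_mm: voxel size along each axis in millimetres (assumed known).
    """
    voxel_volume_mm3 = float(np.prod(spacing_mm))
    # 1 mL = 1000 mm^3
    return float(mask.sum()) * voxel_volume_mm3 / 1000.0


# Synthetic example: a 10 x 10 x 10 voxel cube inside a 64^3 volume
mask = np.zeros((64, 64, 64), dtype=bool)
mask[20:30, 20:30, 20:30] = True

# 1000 voxels, each 1.0 x 0.5 x 0.5 mm
print(f"{lesion_volume_ml(mask, spacing_mm=(1.0, 0.5, 0.5)):.2f} mL")
```

Comparing this value across two scans of the same patient is one simple way to track whether a lesion is growing or shrinking.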

AI algorithms can also help with medical image segmentation, the process of dividing an image into distinct regions or objects. Segmentation is useful for identifying specific structures, such as tumors, blood vessels, and organs, and for determining their boundaries. Automated segmentation can be much faster than outlining structures by hand and, when the model is well validated, comparably accurate, which can support both diagnosis and treatment planning.
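
At its simplest, segmentation assigns each pixel to a region. The following sketch uses a fixed intensity threshold on a synthetic image; it is a classical baseline, not an AI model, and the helper name `threshold_segment` is hypothetical. Learned segmentation models replace the hand-picked threshold with a per-pixel prediction learned from annotated examples.

```python
import numpy as np


def threshold_segment(image: np.ndarray, threshold: float) -> np.ndarray:
    """Label every pixel brighter than `threshold` as foreground (True)."""
    return image > threshold


# Synthetic 2D "scan": dim background (values in [0, 0.3)) with a
# bright 10 x 10 square "structure" (values in [0.6, 0.9))
rng = np.random.default_rng(0)
image = rng.uniform(0.0, 0.3, size=(32, 32))
image[10:20, 12:22] += 0.6

mask = threshold_segment(image, threshold=0.5)
rows, cols = np.nonzero(mask)
print("foreground pixels:", int(mask.sum()))
print("bounding box rows", rows.min(), "to", rows.max(), "cols", cols.min(), "to", cols.max())
```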

🔬 AI in Diagnostics

In addition to medical imaging, AI algorithms can also improve diagnostics in other areas of medicine. For example, AI algorithms can analyze electronic health records (EHRs) to identify patterns in patient data, such as symptoms, medical history, and test results. By analyzing this data, AI can help physicians make more accurate diagnoses, predict the risk of developing specific diseases, and recommend personalized treatment plans.

AI can also help interpret laboratory results, such as blood tests, urine tests, and genetic tests, and provide insights into conditions such as infections, cancers, and genetic disorders. Beyond images and lab values, AI can analyze physiological signals such as electrocardiograms (ECGs), which record the heart's electrical activity, to detect irregular heartbeats and other heart conditions.
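
As a simplified illustration of the kind of signal analysis involved, the sketch below summarizes heart rhythm from the times of the R peaks (the prominent spike of each heartbeat on an ECG trace). It assumes the R peaks have already been detected. Both the function `rr_summary` and the irregularity cut-off are hypothetical illustrations, not clinical criteria; irregular R-R intervals are one feature associated with arrhythmias such as atrial fibrillation.

```python
import numpy as np

IRREGULARITY_CV = 0.1  # hypothetical cut-off for this sketch, not a clinical threshold


def rr_summary(r_peak_times_s):
    """Summarize rhythm from R-peak timestamps given in seconds.

    Returns the mean heart rate (beats per minute) and the coefficient of
    variation (CV) of the R-R intervals, a crude irregularity measure.
    """
    rr = np.diff(np.asarray(r_peak_times_s, dtype=float))  # R-R intervals in s
    heart_rate_bpm = 60.0 / rr.mean()
    cv = rr.std() / rr.mean()
    return heart_rate_bpm, cv


# Synthetic R-peak times: one evenly spaced, one irregular
regular = [0.0, 0.8, 1.6, 2.4, 3.2, 4.0]
irregular = [0.0, 0.6, 1.5, 1.9, 3.0, 3.4]

for name, peaks in [("regular", regular), ("irregular", irregular)]:
    hr, cv = rr_summary(peaks)
    flag = "flag for review" if cv > IRREGULARITY_CV else "ok"
    print(f"{name}: {hr:.0f} bpm, CV={cv:.2f} -> {flag}")
```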

🔍 The Essential Data Needed for AI in Medical Imaging

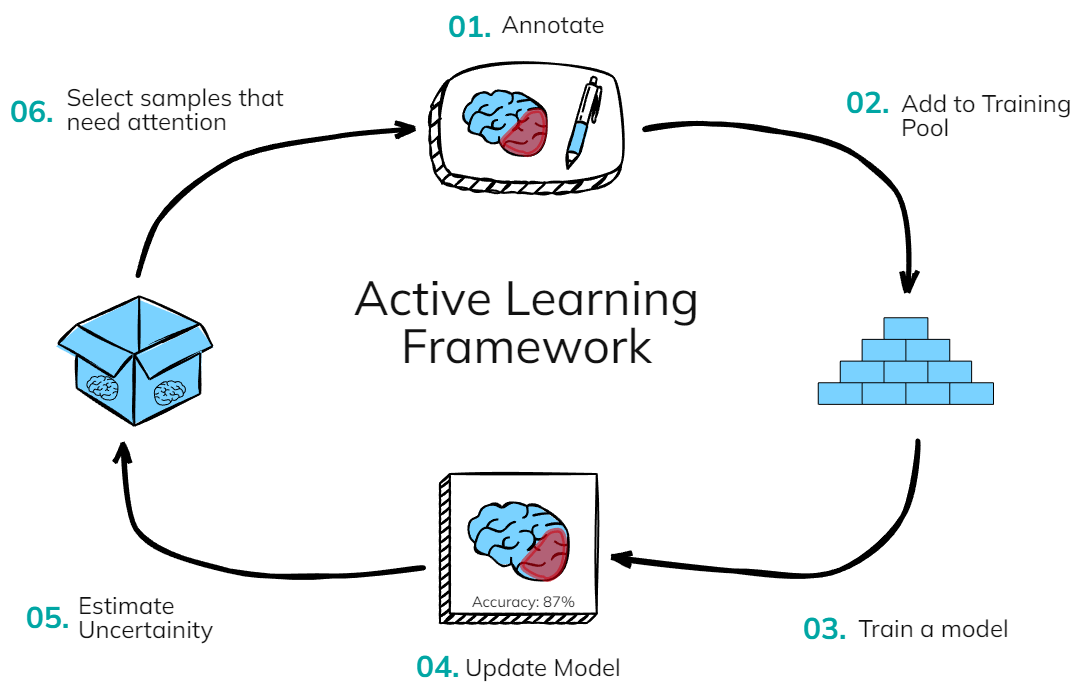

The success of AI algorithms in diagnostics and medical imaging depends on the quality and quantity of the data used to train them. Medical imaging data, such as X-rays, CT scans, and MRIs, are essential for training image-analysis algorithms. For supervised training, these images must be annotated, meaning that the relevant structures, such as tumors or blood vessels, are labeled. Annotation is time-consuming, and different radiologists may label the same image differently, which can affect the accuracy of the resulting algorithm.
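
Inter-rater variation is often quantified with the Dice similarity coefficient, which measures the overlap between two masks (1 means identical, 0 means no overlap). A minimal NumPy sketch on two hypothetical annotations follows; the helper name `dice` is our own. MONAI also ships a `DiceMetric` class for the same purpose during model evaluation.

```python
import numpy as np


def dice(mask_a: np.ndarray, mask_b: np.ndarray) -> float:
    """Dice similarity coefficient: 2 * |A and B| / (|A| + |B|), in [0, 1]."""
    a = mask_a.astype(bool)
    b = mask_b.astype(bool)
    denom = a.sum() + b.sum()
    if denom == 0:
        return 1.0  # both masks empty: treat as perfect agreement
    return float(2.0 * np.logical_and(a, b).sum() / denom)


# Two hypothetical annotations of the same lesion
rater_1 = np.zeros((20, 20), dtype=bool)
rater_1[5:15, 5:15] = True  # 100 pixels
rater_2 = np.zeros((20, 20), dtype=bool)
rater_2[6:16, 5:15] = True  # 100 pixels, shifted down by one row

print(f"Dice = {dice(rater_1, rater_2):.2f}")
```

Here the two outlines share 9 x 10 = 90 pixels, so Dice = 2 x 90 / 200 = 0.90: high agreement, but not perfect.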

In addition to medical imaging data, AI algorithms can also benefit from patient data, such as electronic health records (EHRs), medical histories, and genetic data. This data can help to identify patterns in patient symptoms, medical history, and test results, which can aid in diagnosis and treatment.

🧠 Exploring the Algorithms to Use

Several families of techniques are used in diagnostics and medical imaging: classical machine learning, deep learning (a subset of machine learning based on multi-layer neural networks), and computer vision (the broader field of extracting information from images, which draws on both). Deep learning models such as Convolutional Neural Networks (CNNs) are particularly well suited to image analysis because they learn to recognize patterns and structures directly from pixels. CNNs have been applied successfully to the detection of lung cancer, breast cancer, and skin cancer, among other conditions.
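
The core operation inside a CNN is a convolution: a small grid of weights (a kernel) slides across the image and computes a weighted sum at every position. The sketch below implements that operation directly in NumPy with a hand-picked kernel that responds to vertical edges. In a real CNN the kernel weights are learned during training and many such layers are stacked; the function name `conv2d_valid` is hypothetical.

```python
import numpy as np


def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Slide `kernel` over `image` (no padding, stride 1) and return the
    weighted sums. Like CNN layers, this computes cross-correlation,
    i.e. the kernel is not flipped."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


# Vertical-edge kernel: responds where intensity rises from left to right
kernel = np.array([[-1.0, 0.0, 1.0]] * 3)

image = np.zeros((5, 6))
image[:, 3:] = 1.0  # dark left half, bright right half

response = conv2d_valid(image, kernel)
print(response)
```

The response is large only in the columns where the kernel straddles the dark-to-bright boundary and zero over uniform regions, which is exactly the kind of local feature a CNN's first layers learn to detect.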

Classical machine learning algorithms, such as decision trees and random forests, can be used to analyze patient data and predict the likelihood of a particular disease or condition. These algorithms are trained on patient data, including symptoms, medical histories, and test results, to identify patterns associated with specific diseases or conditions.
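
To show what a decision tree does with patient data, here is a minimal sketch of a depth-1 tree (a "decision stump") on a single numeric feature. The lab values and labels are synthetic, and `fit_stump` is a hypothetical helper. Libraries such as scikit-learn build deeper trees from many features, and a random forest combines the votes of many trees, each trained on a random subset of the samples and features.

```python
from collections import Counter


def majority(labels):
    """Most common label in a non-empty list."""
    return Counter(labels).most_common(1)[0][0]


def fit_stump(x, y):
    """Fit a depth-1 decision tree on one numeric feature.

    Each candidate split sends samples with x <= t to the left leaf and
    x > t to the right leaf; each leaf predicts the majority label of its
    training samples. The split with the highest training accuracy wins.
    Requires at least two distinct values of x.
    Returns (threshold, left_label, right_label, accuracy).
    """
    values = sorted(set(x))
    best = None
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2  # midpoint between neighbouring values
        left = [yi for xi, yi in zip(x, y) if xi <= t]
        right = [yi for xi, yi in zip(x, y) if xi > t]
        left_label, right_label = majority(left), majority(right)
        acc = (left.count(left_label) + right.count(right_label)) / len(y)
        if best is None or acc > best[3]:
            best = (t, left_label, right_label, acc)
    return best


# Synthetic data: one hypothetical lab value per patient, 1 = condition present
x = [4.8, 5.1, 5.5, 6.0, 7.2, 7.9, 8.4, 9.1]
y = [0, 0, 0, 0, 1, 1, 1, 1]

t, left_label, right_label, acc = fit_stump(x, y)
print(f"Predict {right_label} if value > {t:.2f}, else {left_label} (training accuracy {acc:.0%})")
```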

Classical computer vision techniques can also assist with medical image segmentation. These algorithms detect edges, contours, and shapes in medical images, which helps to identify specific structures, such as tumors or blood vessels.
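
Edge detection is a typical example. The sketch below, assuming a 2D grayscale image stored as a NumPy array, approximates the intensity gradient with the classic 3x3 Sobel kernels; pixels with a large gradient magnitude lie on boundaries between structures. The function name `sobel_edges` is hypothetical.

```python
import numpy as np


def sobel_edges(image: np.ndarray) -> np.ndarray:
    """Approximate the gradient magnitude with 3x3 Sobel kernels.

    No padding is used, so the output is 2 pixels smaller than the input
    in each dimension; output[i, j] corresponds to input[i + 1, j + 1].
    """
    p = image.astype(float)
    # Horizontal gradient: right column minus left column, middle row weighted 2
    gx = (p[:-2, 2:] + 2 * p[1:-1, 2:] + p[2:, 2:]) - (p[:-2, :-2] + 2 * p[1:-1, :-2] + p[2:, :-2])
    # Vertical gradient: bottom row minus top row, middle column weighted 2
    gy = (p[2:, :-2] + 2 * p[2:, 1:-1] + p[2:, 2:]) - (p[:-2, :-2] + 2 * p[:-2, 1:-1] + p[:-2, 2:])
    return np.hypot(gx, gy)


# Synthetic image: a bright 6 x 6 "structure" on a dark background
image = np.zeros((12, 12))
image[3:9, 3:9] = 1.0

edges = sobel_edges(image)
# 1 marks a boundary pixel, 0 marks a uniform region (inside or outside)
print((edges > 0).astype(int))
```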

🔮 Foundation Models for Medical Image Analysis

Foundation models are large models pretrained on broad data that can be adapted to many downstream tasks. Some, such as GPT-4 and its variants, were developed primarily for natural language processing (NLP), although GPT-4 can also accept images as input. Through fine-tuning, these models can be adapted to specific tasks, including diagnostics and medical image analysis.

One way that foundation models can be used for medical imaging analysis is through the generation of natural language descriptions of medical images. This can help healthcare professionals to interpret and communicate complex medical information, particularly when dealing with non-expert audiences, such as patients or family members. For example, a foundation model can be trained to generate a description of a medical image that identifies specific structures, such as organs or blood vessels, and highlights any abnormalities or changes that are present.

Foundation models can also assist with medical image segmentation. For example, a foundation model can be fine-tuned on medical segmentation data to identify specific structures in an image and produce a segmentation map. Meta AI has released the Segment Anything Model (SAM) as open source; according to Meta, it can "cut out" any object in any image with a single click. Because SAM was trained mainly on natural photographs, it may need fine-tuning to perform well on medical images.

Additionally, foundation models can be used to analyze electronic health records (EHRs) and other patient data to assist with diagnostics. By analyzing patient data, foundation models can identify patterns and relationships that may be difficult for healthcare professionals to detect. This can aid in the diagnosis of specific diseases and conditions, as well as in the development of personalized treatment plans.

🛠️ From Concept to Reality, Let's Talk about Implementation

Implementing AI in diagnostics and medical imaging requires careful attention to data privacy, accuracy, and safety. The data used to train AI algorithms must be de-identified to protect patient privacy. In addition, an algorithm's accuracy must be validated thoroughly, ideally on data from patients and sites not used in training, before it is used in a clinical setting.
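
One common building block is pseudonymization: removing direct identifiers and replacing the patient ID with a keyed hash, so that records from the same patient remain linked without revealing who the patient is. The sketch below assumes records are simple Python dictionaries; the field names, the key, and the helper `pseudonymize` are hypothetical. Real DICOM de-identification follows the attribute confidentiality profiles in DICOM PS3.15, and dates, free text, or text burned into the image pixels can still identify a patient.

```python
import hashlib
import hmac

# Hypothetical field names; real schemas (DICOM tags, EHR columns) differ.
DIRECT_IDENTIFIERS = {"name", "address", "phone", "date_of_birth"}

# Hypothetical key: in practice, load it from a secrets store, never from source code.
SECRET_KEY = b"replace-with-a-secret-key"


def pseudonymize(record: dict, secret_key: bytes) -> dict:
    """Return a copy of `record` without direct identifiers and with the
    patient ID replaced by an HMAC-SHA256 pseudonym.

    The same ID and key always give the same pseudonym, so a patient's
    records stay linked; without the key the mapping cannot be recomputed.
    """
    clean = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    digest = hmac.new(secret_key, record["patient_id"].encode("utf-8"), hashlib.sha256)
    clean["patient_id"] = digest.hexdigest()[:16]  # shortened for readability
    return clean


record = {
    "patient_id": "MRN-0012345",
    "name": "Jane Doe",
    "date_of_birth": "1970-01-01",
    "modality": "CT",
    "finding": "lung nodule",
}
print(pseudonymize(record, SECRET_KEY))
```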

It is also important to consider potential risks associated with AI in healthcare. AI algorithms can produce false positives or false negatives, which can lead to incorrect diagnoses or treatment recommendations. In addition, there is a risk of bias in AI algorithms if the training data is not representative of the patient population. It is crucial to monitor the performance of AI algorithms regularly and ensure that they are producing accurate and unbiased results.
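
Monitoring for bias can start with something as simple as computing sensitivity (the share of true cases detected) and specificity (the share of healthy cases correctly cleared) separately for each patient subgroup. The sketch below uses toy predictions for two hypothetical groups, "A" and "B" (for example, two hospitals); the helper `subgroup_metrics` is hypothetical. A large gap between groups is a signal to investigate the training data.

```python
from collections import defaultdict


def subgroup_metrics(y_true, y_pred, groups):
    """Sensitivity and specificity per subgroup (binary labels, 1 = disease).

    Returns {group: (sensitivity, specificity)}; a value is NaN when the
    group has no positive (or no negative) cases.
    """
    counts = defaultdict(lambda: {"tp": 0, "fn": 0, "tn": 0, "fp": 0})
    for t, p, g in zip(y_true, y_pred, groups):
        if t:
            counts[g]["tp" if p else "fn"] += 1
        else:
            counts[g]["fp" if p else "tn"] += 1
    results = {}
    for g, c in counts.items():
        pos, neg = c["tp"] + c["fn"], c["tn"] + c["fp"]
        sens = c["tp"] / pos if pos else float("nan")
        spec = c["tn"] / neg if neg else float("nan")
        results[g] = (sens, spec)
    return results


# Toy labels and model predictions for two hypothetical groups
y_true = [1, 1, 1, 0, 0, 0, 1, 1, 1, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 1, 1, 0, 0, 0, 0, 0]
groups = ["A"] * 6 + ["B"] * 6

for g, (sens, spec) in subgroup_metrics(y_true, y_pred, groups).items():
    print(f"group {g}: sensitivity={sens:.2f}, specificity={spec:.2f}")
```

In this toy data the model misses two of the three cases in group B, a pattern that overall accuracy alone would hide.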

AI algorithms may also help with the diagnosis of rare diseases, which are often hard to diagnose because individual physicians see few cases and knowledge about their symptoms and causes is limited. AI algorithms can analyze patient data, medical images, and genetic data to identify patterns that may be associated with rare diseases, helping physicians reach accurate diagnoses sooner and offer better treatment options.

🌐 Open Source Tools: MONAI

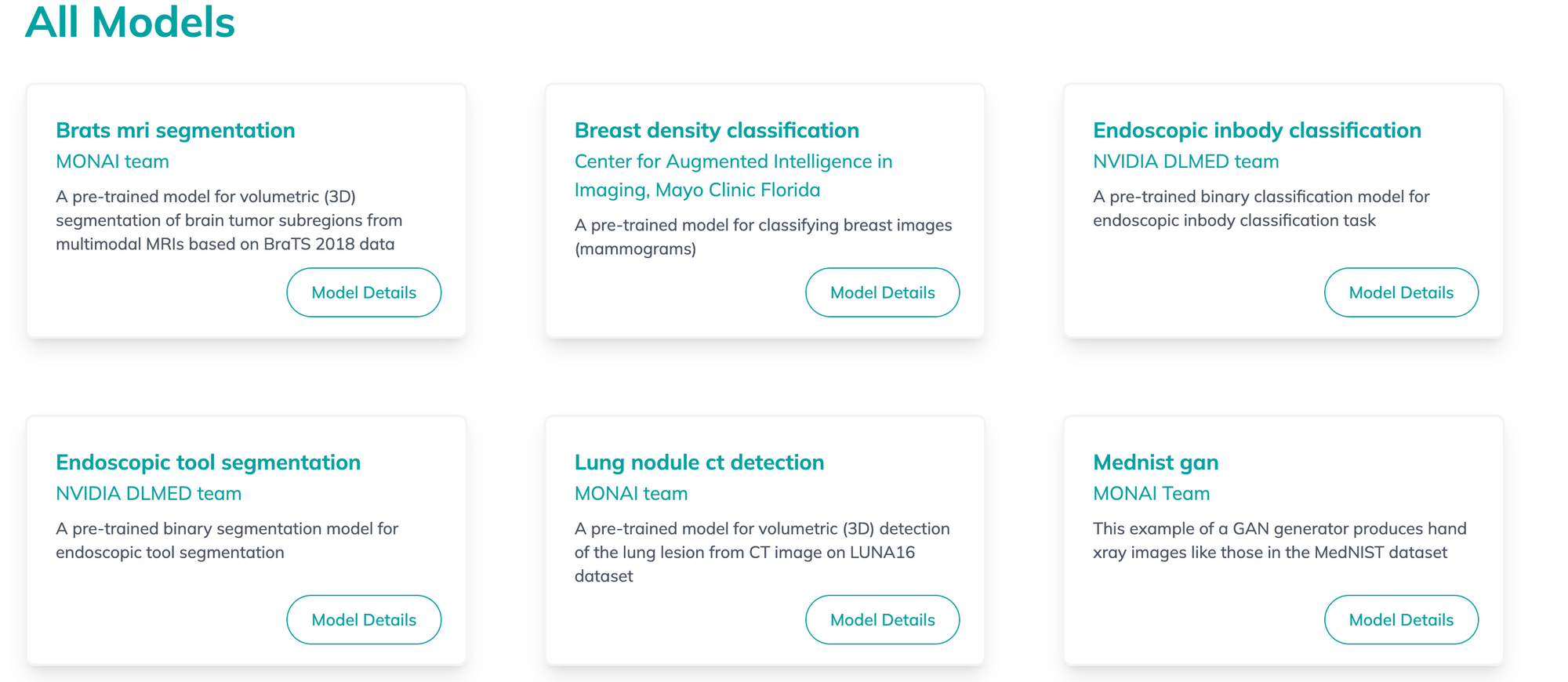

MONAI is a PyTorch-based, open-source framework for deep learning in healthcare imaging. It is designed to be user-friendly, reproducible, and easy to integrate into existing workflows. MONAI provides tools for data loading, preprocessing, visualization, and training, and it offers pre-trained models (for example, through the MONAI Model Zoo) for tasks such as segmentation, classification, and registration.

MONAI is developed and maintained by a community of researchers and developers from academia, industry, and clinical settings. The project is hosted on GitHub and is released under the Apache 2.0 license.

Here are some of the key features of MONAI:

- User-friendly: MONAI aims to be approachable even for users who are new to deep learning for medical imaging, with concise, readable code, extensive documentation, and a wide range of tutorials and examples.

- Reproducible: MONAI is designed so that researchers can easily share and compare their results. Supporting features include utilities for fixing random seeds, a standard "bundle" format for packaging a model together with its configuration, shared implementations of common evaluation metrics, and logging and visualization tools.

- Easy to integrate: MONAI builds on standard PyTorch classes and exposes a well-defined API, so its networks, pre-trained models, and data loading and preprocessing tools can be used within existing PyTorch code.

Taken together, these features make MONAI a valuable resource for researchers and developers building AI-powered solutions for healthcare.

👾 A Python example using MONAI

To get started, first install MONAI and PyTorch:

```bash
pip install monai torch
```

In this example, we'll train a 3D DenseNet, a convolutional neural network (CNN) architecture, to classify 3D medical images (e.g., CT scans) as either normal or abnormal. For simplicity, we'll use synthetic random data; in practice, you would use a real, labeled dataset.

```python
import numpy as np
import torch
from torch.utils.data import Dataset
from monai.data import DataLoader
from monai.networks.nets import DenseNet
from monai.transforms import Compose, EnsureChannelFirst, ScaleIntensity, ToTensor

# Preprocessing applied to each sample (not to a whole batch):
# add a channel axis, rescale intensities to [0, 1], convert to a tensor.
transforms = Compose([
    EnsureChannelFirst(channel_dim="no_channel"),  # (D, H, W) -> (1, D, H, W)
    ScaleIntensity(),
    ToTensor(),
])


# A custom dataset that returns random volumes with random labels
class SyntheticDataset(Dataset):
    def __init__(self, num_samples=100, img_shape=(64, 64, 64), num_classes=2, transform=None):
        self.num_samples = num_samples
        self.img_shape = img_shape
        self.num_classes = num_classes
        self.transform = transform

    def __len__(self):
        return self.num_samples

    def __getitem__(self, idx):
        # float32 to match the dtype of the model's weights
        img = np.random.rand(*self.img_shape).astype(np.float32)
        label = np.random.randint(self.num_classes)
        if self.transform is not None:
            img = self.transform(img)
        return img, label


# Create the dataset and data loader
dataset = SyntheticDataset(transform=transforms)
# num_workers=0 keeps the example runnable as a plain script on every OS
dataloader = DataLoader(dataset, batch_size=4, shuffle=True, num_workers=0)

# Create the model
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = DenseNet(
    spatial_dims=3,
    in_channels=1,
    out_channels=2,
    init_features=32,
).to(device)

# Set the loss function and optimizer
criterion = torch.nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=1e-4)

# Train the model
num_epochs = 5
for epoch in range(num_epochs):
    print(f"Epoch {epoch + 1}/{num_epochs}")
    model.train()
    for i, (inputs, labels) in enumerate(dataloader):
        inputs = inputs.to(device)  # shape (batch, 1, 64, 64, 64)
        labels = labels.to(device)  # shape (batch,)

        optimizer.zero_grad()
        outputs = model(inputs)  # logits, shape (batch, 2)
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()

        print(f"Batch {i + 1}, Loss: {loss.item():.4f}")
```

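In this example, we create a synthetic dataset of random 3D volumes and labels, apply a chain of MONAI transforms to each sample (adding a channel axis, rescaling intensities, and converting to a tensor), and train MONAI's 3D DenseNet on the result. The transforms run inside the dataset because MONAI array transforms expect a single image: applied to a whole batch, a channel-adding transform would insert the new axis in front of the batch dimension. Recent MONAI releases have also removed `AddChannel`, so the example uses `EnsureChannelFirst` instead. Because the labels are random, the loss is not expected to improve meaningfully; the point is only to show the training loop.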

Keep in mind that this example uses synthetic data, and you'll need to replace the SyntheticDataset class with your own dataset class that loads real medical images. Additionally, you may need to experiment with different architectures, hyperparameters, and data augmentation techniques to improve the model's performance. You can find more examples and tutorials in the MONAI documentation.

💻 No-code AI Tools for Healthcare

Several no-code and low-code AI platforms make it easier for healthcare professionals to build models for diagnostics and medical imaging without advanced programming skills. They can help analyze medical images and patient data and identify patterns that support diagnosis and treatment. Well-known options include:

- Google AutoML: Google's AutoML tools (now part of Vertex AI) let users train custom machine learning models, including image classification models, largely without writing code. With suitably labeled data, they can be used to build models that detect patterns associated with specific diseases and conditions.

- IBM Watson Studio: IBM Watson Studio is a platform for building and deploying machine learning models. Its AutoAI feature automates model selection and tuning, which lowers the technical barrier to building models from medical images and patient data.

- Microsoft Azure AutoML: Automated ML in Azure Machine Learning is a cloud service that trains and compares candidate models automatically and offers a no-code interface in Azure Machine Learning studio for some tasks. It can be used to build models that detect patterns in medical images and patient data.

- NVIDIA Clara: NVIDIA Clara is a platform of AI tools and pre-trained models for healthcare, including medical imaging. It is aimed more at developers than at no-code users, but its pre-trained models can shorten the path to analyzing medical images and identifying structures and patterns associated with diseases and conditions.

- Amazon SageMaker: Amazon SageMaker is a platform for building, training, and deploying machine learning models, including for medical image and patient data analysis. Its SageMaker Canvas interface supports no-code model building, and the platform provides pre-built models and algorithms that make it easier to implement AI in healthcare.

Startups are also innovating. One example is RedBrick, which offers a collaborative annotation platform purpose-built for medical data, helping healthcare AI teams build high-quality training datasets quickly.

🤩 Conclusion and Benefits

AI is transforming the field of medicine, particularly diagnostics and medical imaging. AI algorithms can analyze vast amounts of medical data and identify patterns that may be difficult for physicians to detect. Key benefits include:

- Improved accuracy: When trained and validated on high-quality data, AI algorithms can identify abnormalities in medical images with high accuracy, including some that are difficult to see with the naked eye. Earlier detection and treatment of disease can in turn improve patient outcomes.

- Increased efficiency: AI algorithms can automate many of the tasks involved in medical imaging, such as image analysis and interpretation. This can free up radiologists and other healthcare professionals to focus on other tasks, such as patient care.

- Personalized medicine: Research in radiogenomics suggests that AI models can sometimes infer molecular characteristics of a disease, such as certain genetic mutations, from medical images. Combined with genetic testing, this information may help select the most effective treatment for an individual patient.

AI has the potential to transform healthcare by providing more personalized and accurate diagnoses and treatment plans, ultimately improving patient outcomes.